If you are an early adopter of Docker, chances are that you are running it on a popular distro like Red Hat, CentOS or Ubuntu. That was the case with a few web application systems we managed. We soon realized that the extra services CentOS ran just ate up resources and were not needed by Docker, which requires little more than a Linux kernel.

We started looking for operating systems that had a minimal resource footprint and left as many resources as possible for Docker. CoreOS and Project Atomic soon emerged as good candidates for our servers, and we gave CoreOS a go. Today I'll go over some benefits of using a minimal OS like CoreOS that's customized for running Docker containers.

How is CoreOS different?

CoreOS is a Just Enough OS (JEOS) distribution that focuses on doing one thing very well: keeping the base operating system secure at all times. It achieves this by separating the operating system layer from the applications. Applications run in containers, so their dependencies and associated vulnerabilities are handled separately from the OS.

CoreOS then adds components for distributed computing and clustering, which make CoreOS clusters highly reliable and scalable. In effect, it gives web application developers a low-cost platform that addresses three core issues: security, reliability and performance.

Why use CoreOS with Docker?

Docker by itself solves a lot of problems, most notably streamlining DevOps. Paired with a lightweight, cluster-optimized, secure operating system like CoreOS, it provides an environment that is also highly available and scalable. Here are the most important benefits:

Security through auto-updates

CoreOS is less than 200 MB in size and contains only what's needed for containers to work and scale. It keeps two copies of its OS image at any one time, and as soon as a patch is available it automatically updates the inactive copy. It then schedules a reboot into the updated image, coordinating with the other members of the cluster through a program called locksmith.

In a cluster we implemented recently, CoreOS took less than 40 seconds to reboot, and during that time fleet seamlessly moved the node's workload to another node. With the reboot strategy set to "etcd-lock", only one node rebooted at any given time, so the cluster always kept enough redundancy.
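For reference, here is a minimal sketch of how that reboot strategy can be selected. It assumes the setting is made through the node's cloud-config, and it simply writes a small fragment to a file; the file name update-strategy.yaml is a hypothetical choice, and in practice these keys would be merged into the node's existing cloud-config.yaml.

```shell
#!/bin/sh
# Sketch: write a minimal cloud-config fragment that makes CoreOS
# reboot after an update only while holding locksmith's etcd-backed lock.
# The output file name is a hypothetical choice for illustration.
set -eu

cat > update-strategy.yaml <<'EOF'
#cloud-config
coreos:
  update:
    # etcd-lock: a node reboots only after acquiring the cluster-wide
    # lock, so by default nodes reboot one at a time
    reboot-strategy: etcd-lock
EOF

echo "wrote update-strategy.yaml"
```

On a running node, locksmithctl status reports which machines currently hold the reboot lock.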

High availability

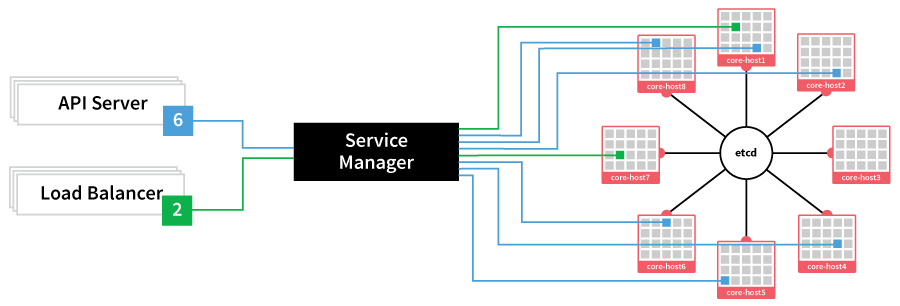

CoreOS comes with a program called fleet that makes it easy to manage a large pool of services from a central location. For example, in a cluster we maintain, the following commands start a set of Apache servers and show which nodes are running them:

# fleetctl start apache.*

# fleetctl list-units

Using the [X-Fleet] section of the unit files, we configured fleet to put each Apache instance on a different machine. When one machine went down, its instance was simply moved to another node in the cluster, and the service always remained available.

High availability with fleetd (Image taken from CoreOS.com)
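To make the X-Fleet setup above more concrete, here is a minimal sketch that generates three unit files, apache.1.service to apache.3.service, so that the "fleetctl start apache.*" glob has something to match. The unit contents, the "httpd" image and the container names are illustrative assumptions, not our production units; the key line is Conflicts= in the [X-Fleet] section, which tells fleet not to schedule two matching units on the same machine.

```shell
#!/bin/sh
# Sketch: generate fleet unit files apache.1.service .. apache.3.service.
# Image name, container names and ports are illustrative assumptions.
set -eu

for i in 1 2 3; do
  # Unquoted here-doc so ${i} expands; "*" is not glob-expanded here.
  cat > "apache.${i}.service" <<EOF
[Unit]
Description=Apache web server instance ${i}
After=docker.service
Requires=docker.service

[Service]
TimeoutStartSec=0
# Remove any stale container from a previous run (failure ignored)
ExecStartPre=-/usr/bin/docker rm -f apache${i}
ExecStartPre=/usr/bin/docker pull httpd
ExecStart=/usr/bin/docker run --name apache${i} -p 80:80 httpd
ExecStop=/usr/bin/docker stop apache${i}

[X-Fleet]
# Never co-locate this unit with another apache.* unit
Conflicts=apache.*.service
EOF
done

ls apache.*.service
```

Running the two fleetctl commands above from the directory holding these files submits and starts all three units, since the shell expands apache.* to the local file names. Because every instance publishes port 80, keeping them on separate machines also avoids port clashes on a single host.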

Easy scalability

In a traditional server infrastructure, when you need another system to handle the load, you go through a long-winded process: configuring a system from scratch, adding it to the configuration manager and updating the load balancer.

In contrast, for the CoreOS systems we manage, we just copy the cloud-config.yaml file from an existing node and install the new system with it. Here's the command:

# coreos-install -d /dev/sda -c cloud-config.yaml

As soon as the new server boots up, etcd discovers the new node and adds it to the cluster. Then it's just a matter of starting an app on the new node using fleet. The whole process takes only a few minutes.
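For illustration, the cluster-related part of such a cloud-config.yaml might look like the sketch below, written out with a shell here-document. This is an assumption-based example, not our production file: the discovery token <token> is a placeholder that must be the one the existing cluster was bootstrapped with, and $private_ipv4 is a substitution variable that coreos-cloudinit fills in only on platforms that provide it (on bare metal you would write the node's own address instead).

```shell
#!/bin/sh
# Sketch: cluster-related section of a cloud-config.yaml for a new node.
# <token> is a placeholder; reuse the existing cluster's discovery URL.
set -eu

# Quoted here-doc so $private_ipv4 is written literally for cloudinit.
cat > cloud-config.yaml <<'EOF'
#cloud-config
coreos:
  etcd2:
    # Same discovery URL as the existing nodes, so etcd can find them
    discovery: https://discovery.etcd.io/<token>
    advertise-client-urls: http://$private_ipv4:2379
    initial-advertise-peer-urls: http://$private_ipv4:2380
    listen-client-urls: http://0.0.0.0:2379
    listen-peer-urls: http://$private_ipv4:2380
  units:
    # Start etcd and fleet at boot so the node joins the cluster
    - name: etcd2.service
      command: start
    - name: fleet.service
      command: start
EOF

echo "wrote cloud-config.yaml"
```

The resulting file is what gets passed to coreos-install with the -c option.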

Easy configuration management

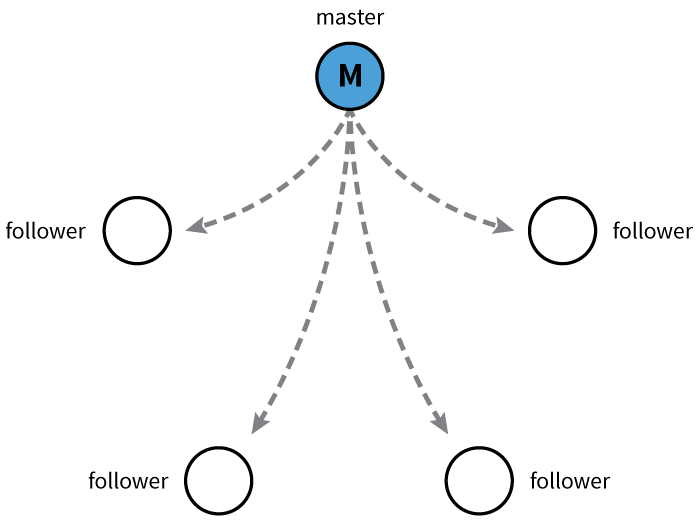

One issue with scaling is that you need an easy way to manage your cluster configuration. Tools like Chef, Puppet and Ansible give you that option, but with CoreOS it's a bit easier. You can update a configuration value on one node, and etcd replicates it to the whole cluster almost instantly. One node acts as the leader (the "master"), and if it goes down, the remaining nodes elect a new leader, so the cluster configuration information stays available.

Etcd configuration management (Image taken from CoreOS.com)

Conclusion

Docker and the tools in its ecosystem solve many bottlenecks of traditional systems. For example, as we've seen in previous posts, Cockpit used with Docker makes configuration management and monitoring quite easy, which makes DevOps very efficient.

When CoreOS is used with Docker, you also get out-of-the-box security, scalability and reliability. CoreOS + Docker is not yet a full replacement for every capability of a traditional web application infrastructure, but given how fast both technologies are growing, it is worth considering a move to CoreOS + Docker.

Bobcares server administrators routinely help webmasters and service providers configure their infrastructure and keep their servers secure and responsive. Our server management services cover 24/7 monitoring, emergency administration, periodic security hardening, periodic performance tuning and server updates.

