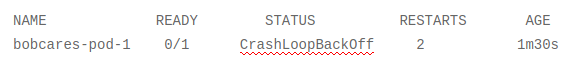

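CrashLoopBackOff is a common Kubernetes error indicating that a container in a pod keeps crashing and being restarted in an endless loop. After each crash, the kubelet waits for a growing back-off delay before restarting the container, which is where the name comes from.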
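To make the "back-off" part concrete, here is a small Python sketch of the restart delay schedule described in the Kubernetes documentation. The kubelet waits about 10 seconds before the first restart, doubles the delay after each further crash, and caps it at five minutes. The timer resets once the container has run for 10 minutes without problems. The constant names and the function are illustrative only. The real timing is handled internally by the kubelet and may differ slightly between versions.

```python
# Sketch of the kubelet's restart back-off as described in the Kubernetes docs:
# start at 10s, double after every crash, never exceed 300s (5 minutes).
INITIAL_DELAY_S = 10
MAX_DELAY_S = 300


def backoff_delays(restarts: int) -> list[int]:
    """Return the approximate wait (in seconds) before each of the first `restarts` restarts."""
    delays = []
    delay = INITIAL_DELAY_S
    for _ in range(restarts):
        delays.append(delay)
        delay = min(delay * 2, MAX_DELAY_S)  # exponential growth with a cap
    return delays


if __name__ == "__main__":
    for attempt, delay in enumerate(backoff_delays(8), start=1):
        print(f"restart {attempt}: wait ~{delay}s")
```

For eight consecutive crashes, this prints waits of 10, 20, 40, 80, 160, 300, 300 and 300 seconds. That is why a pod can sit in CrashLoopBackOff for several minutes between restart attempts.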

As part of our Server management service, Bobcares responds to all inquiries, large and small.

Let’s take a closer look at the CrashLoopBackOff error in Kubernetes.

CrashLoopBackOff Kubernetes Error

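Using the following command, we can check the status of our pods:

kubectl get pods

A pod affected by this error shows CrashLoopBackOff in the STATUS column, and its RESTARTS count keeps increasing.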
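When many pods are running, it helps to filter this output automatically. The following Python sketch returns the names of pods whose STATUS is CrashLoopBackOff. It assumes kubectl is installed and pointed at the right cluster. It also relies on the default table layout, in which the columns up to STATUS contain no spaces. The function names are our own.

```python
import subprocess
from typing import Optional


def crashlooping_pods(get_pods_output: str) -> list[str]:
    """Return the names of pods whose STATUS column reads CrashLoopBackOff.

    Columns up to and including STATUS contain no spaces, so splitting on
    whitespace finds them even when RESTARTS looks like "5 (30s ago)".
    """
    lines = [line for line in get_pods_output.splitlines() if line.strip()]
    if not lines:
        return []
    header = lines[0].split()
    name_col = header.index("NAME")      # shifts right when -A adds a NAMESPACE column
    status_col = header.index("STATUS")
    return [
        fields[name_col]
        for fields in (line.split() for line in lines[1:])
        if len(fields) > status_col and fields[status_col] == "CrashLoopBackOff"
    ]


def get_pods_output(namespace: Optional[str] = None) -> str:
    """Run `kubectl get pods` (optionally with -n) and return the table it prints."""
    cmd = ["kubectl", "get", "pods"]
    if namespace:
        cmd += ["-n", namespace]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout
```

For example, print(crashlooping_pods(get_pods_output())) lists the affected pods in the current namespace. Each name can then be passed to kubectl describe pod during diagnosis.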

Common Causes of CrashLoopBackOff Error

A number of reasons can trigger the CrashLoopBackOff error. Below are a few of the common causes:

- Insufficient resources: The container cannot start or keep running because there are not enough resources, such as memory.

- Locked file: A file the container needs has already been locked by another container.

- Locked database: The database is being used and locked by other pods.

- Failed reference: Scripts or binaries that the container refers to are missing from the container.

- Setup error: There is a problem with the setup of a Kubernetes init container.

- Config loading error: The server cannot load its configuration file.

- Misconfigurations: There is a general misconfiguration of the file system.

- Connection issues: DNS or kube-dns is unable to communicate with a third-party service.

- Deploying failed services: There is an attempt to deploy services or applications that have previously failed.

Diagnosis and Resolution

The best way to identify the root cause of the error is to go through the list of potential causes one by one, beginning with the most common ones.

Look for “Back Off Restarting Failed Container”

- Firstly, run the following command:

kubectl describe pod [name]

- If the pod's events show Liveness probe failed and Back-off restarting failed container messages from the kubelet, the container is not responding and is in the process of being restarted.

- If we see the back-off restarting failed container message, we may be dealing with a temporary resource overload caused by a spike in activity.

- To give the application a larger window of time to respond, increase periodSeconds or timeoutSeconds in the liveness probe.
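As a rough illustration of that adjustment, the Python sketch below builds a liveness probe using the standard Kubernetes field names httpGet, periodSeconds, timeoutSeconds and failureThreshold. It then estimates how long a stalled application survives before the kubelet restarts it. The /healthz path and port 8080 are hypothetical placeholders. The estimate (failureThreshold × periodSeconds) is a deliberate simplification that ignores initialDelaySeconds and the time each probe takes.

```python
import json


def liveness_probe(path: str, port: int, period_seconds: int = 10,
                   timeout_seconds: int = 1, failure_threshold: int = 3) -> dict:
    """Build an HTTP liveness probe; the defaults match Kubernetes' own defaults."""
    return {
        "httpGet": {"path": path, "port": port},
        "periodSeconds": period_seconds,        # how often the kubelet probes
        "timeoutSeconds": timeout_seconds,      # how long a single probe may take
        "failureThreshold": failure_threshold,  # consecutive failures before a restart
    }


def approx_grace_seconds(probe: dict) -> int:
    """Rough time a stalled app survives before a restart: failures x period."""
    return probe["failureThreshold"] * probe["periodSeconds"]


if __name__ == "__main__":
    # Hypothetical endpoint; use whatever health check your application exposes.
    default = liveness_probe("/healthz", 8080)
    relaxed = liveness_probe("/healthz", 8080, period_seconds=20, timeout_seconds=5)
    print(approx_grace_seconds(default), approx_grace_seconds(relaxed))  # 30 60
    print(json.dumps({"livenessProbe": relaxed}, indent=2))
```

Doubling periodSeconds doubles the rough grace window from 30 to 60 seconds. Raising timeoutSeconds instead helps when each individual health check is slow to answer under load.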

If this was not the problem, move on to the next step.

Look at the logs from the previous container instance

If the pod details didn't reveal anything, we should look at the logs from the previous container instance. To get the last ten log lines from it, run the following command:

kubectl logs [name] --previous --tail 10

Then, look through the log for clues as to why the pod keeps crashing. If we can't solve the problem, we'll move on to the next step.
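Scanning the previous instance's log can be partly automated. The Python sketch below builds the same kubectl logs command and keeps only lines containing typical failure words. The keyword list is a hypothetical starting point, not an exhaustive rule. The sketch assumes kubectl is installed and configured.

```python
import subprocess
from typing import Optional

# Hypothetical keywords: adjust them to the messages your application writes.
CLUE_KEYWORDS = ("error", "exception", "fatal", "panic", "refused", "not found")


def previous_logs_command(pod: str, tail: int = 10,
                          namespace: Optional[str] = None) -> list[str]:
    """Build `kubectl logs [pod] --previous --tail N` as an argument list."""
    cmd = ["kubectl", "logs", pod, "--previous", "--tail", str(tail)]
    if namespace:
        cmd += ["-n", namespace]
    return cmd


def find_clues(log_text: str) -> list[str]:
    """Return the lines that mention any keyword, ignoring case."""
    return [line for line in log_text.splitlines()
            if any(word in line.lower() for word in CLUE_KEYWORDS)]


def scan_previous_logs(pod: str, tail: int = 10,
                       namespace: Optional[str] = None) -> list[str]:
    """Fetch the crashed container's last log lines and keep the suspicious ones."""
    result = subprocess.run(previous_logs_command(pod, tail, namespace),
                            capture_output=True, text=True, check=True)
    return find_clues(result.stdout)
```

Calling scan_previous_logs with the pod name from kubectl get pods returns only the flagged lines. The full log is still worth reading when nothing is flagged.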

Examine the Deployment Logs

- Firstly, to get the deployment logs, run the following command:

kubectl logs -f deploy/[deployment-name] -n [namespace]

- These logs can also reveal problems at the application level.

- Finally, if all of the above fails, we’ll perform advanced debugging on the container that’s crashing.

Advanced Debugging: CrashLoop Container Bashing

To gain direct access to the CrashLoop container and identify and resolve the issue that caused it to crash, follow the steps below.

Step 1: Determine the entrypoint and cmd

To debug the container, we’ll need to figure out what the entrypoint and cmd are. Perform the following actions:

- Firstly, to pull the image, run

docker pull [image-id]

- Then, run the following command to find the container image's entrypoint and cmd:

docker inspect [image-id]
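The docker inspect output is a JSON array. For an image, the entrypoint and cmd sit under Config.Entrypoint and Config.Cmd. The Python sketch below extracts both, assuming Docker is installed and the image has already been pulled. The function names are our own. In a Kubernetes container spec, the command field overrides the image's entrypoint and args overrides its cmd. Recording the original values therefore makes it easy to restore them after debugging.

```python
import json
import subprocess


def entrypoint_and_cmd(inspect_output: str) -> tuple[list[str], list[str]]:
    """Pull Entrypoint and Cmd out of `docker inspect [image-id]` JSON output."""
    image = json.loads(inspect_output)[0]  # docker inspect prints a JSON array
    config = image.get("Config") or {}
    # Either field may be null when the image does not set it.
    return config.get("Entrypoint") or [], config.get("Cmd") or []


def inspect_image(image_id: str) -> tuple[list[str], list[str]]:
    """Run docker inspect on an already pulled image and parse the result."""
    result = subprocess.run(["docker", "inspect", image_id],
                            capture_output=True, text=True, check=True)
    return entrypoint_and_cmd(result.stdout)
```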

Step 2: Change the entrypoint

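We'll need to temporarily change the entrypoint in the container specification to the following command. It keeps the container running without starting the crashing application, so we can get inside it:

tail -f /dev/null

Step 3: Set up debugging software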

We should now be able to use kubectl exec to get a shell inside the buggy container. Make sure the debugging tools we need (for example, curl or vim) are installed, or add them. On Debian- or Ubuntu-based images, we can install them with this command:

sudo apt-get install [name of debugging tool]

Step 4: Verify that no packages or dependencies are missing

Check for any missing packages or dependencies that are preventing the app from starting. If any are missing, provide them to the application and see whether that resolves the error. If nothing is missing or the error persists, proceed to the next step.
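One quick way to spot missing binaries is to check the container's PATH for each command the application needs. The Python sketch below does that with shutil.which. It assumes a Python interpreter is available in the container, or was added along with the other debugging tools. The REQUIRED_BINARIES list is hypothetical and should be replaced with the commands your entrypoint actually calls.

```python
import shutil

# Hypothetical list: replace with the binaries your entrypoint and scripts call.
REQUIRED_BINARIES = ["sh", "curl", "python3"]


def missing_binaries(names: list[str]) -> list[str]:
    """Return the names that cannot be found on the current PATH."""
    return [name for name in names if shutil.which(name) is None]


if __name__ == "__main__":
    missing = missing_binaries(REQUIRED_BINARIES)
    print("missing:", ", ".join(missing) if missing else "none")
```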

Step 5: Verify the application’s settings

Examine the environment variables to make sure they are correct. If they are, the configuration files may be missing, which causes the application to fail. We can use curl to download the missing files. If any configuration changes are required, such as the username and password in the database configuration file, we can make them with vim. If the problem was not caused by missing files or configuration, we'll need to look into some of the less common causes.
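Both checks in this step can be scripted from inside the container. The Python sketch below reports environment variables that are unset or empty, and configuration files that are absent or empty. It prints names only, so values such as passwords are not echoed. The variable names and the /etc/myapp/config.yaml path are hypothetical examples, not something the application is known to use.

```python
import os
from pathlib import Path
from typing import Iterable, Mapping

# Hypothetical names: replace them with what your application actually reads.
REQUIRED_ENV = ["DB_HOST", "DB_USER", "DB_PASSWORD"]
REQUIRED_FILES = ["/etc/myapp/config.yaml"]


def missing_env(names: Iterable[str], env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the variables that are unset or empty (values are never printed)."""
    return [name for name in names if not env.get(name)]


def missing_files(paths: Iterable[str]) -> list[str]:
    """Return the paths that are absent, not regular files, or empty."""
    return [p for p in paths
            if not Path(p).is_file() or Path(p).stat().st_size == 0]


if __name__ == "__main__":
    print("missing variables:", missing_env(REQUIRED_ENV))
    print("missing or empty files:", missing_files(REQUIRED_FILES))
```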

The CrashLoopBackOff Error and How to Avoid It

Here is a list of best practices we can use to avoid getting the CrashLoopBackOff error:

- Configure and double-check the files

The CrashLoopBackOff error can be caused by a misconfigured or missing configuration file, which prevents the container from starting properly. Before deploying, ensure that all files are present and properly configured.

Files are typically stored in /var/lib/docker. To see if the target file exists, we can use commands like ls and find. We can also investigate files with cat and less to ensure that there are no misconfiguration issues.

- Be Wary of Third-Party Services

If an application uses a third-party service and calls to that service fail, the problem may lie with the service itself. Most of these errors are caused by SSL certificate problems or network issues, so we need to ensure that both are working. To test, we can log into the container and use curl to reach the endpoints manually.

- Examine the Environment Variables

The CrashLoopBackOff error is frequently caused by incorrect environment variables. Containers that require Java to run often have their environment variables set incorrectly. So, check the environment variables with env to make sure they are correct.

- Examine Kube-DNS

If the application is trying to connect to an external service while the kube-dns service is not operational, the connection fails. In that case, restarting the kube-dns service allows the container to reach the external service again.
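Before restarting kube-dns, it helps to confirm that name resolution is really the problem. The Python sketch below, run from inside the affected container, separates a DNS failure from a host that resolves but refuses connections. The function name and its return labels are our own, and the host and port are whatever external service the application calls. SSL certificate problems are not covered here; curl remains the easier tool for those.

```python
import socket


def diagnose_endpoint(host: str, port: int, timeout: float = 3.0) -> str:
    """Return 'ok', 'dns-failure' or 'connect-failure' for host:port."""
    try:
        addresses = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror:
        # The name did not resolve: inside a pod this points at kube-dns.
        return "dns-failure"
    for family, socktype, proto, _canonname, sockaddr in addresses:
        try:
            with socket.socket(family, socktype, proto) as sock:
                sock.settimeout(timeout)
                sock.connect(sockaddr)
                return "ok"
        except OSError:
            continue  # try the next resolved address
    # The name resolved, but no address accepted a TCP connection.
    return "connect-failure"
```

A "dns-failure" result points towards kube-dns, while "connect-failure" suggests the service itself or the network path to it.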

- Check for File Locks

As previously stated, file locks are a common cause of the CrashLoopBackOff error. So, inspect all ports and containers to make sure none of them is being used by the wrong service. If one is, stop the service that is occupying the required port.
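To check whether a required port is already taken, we can try to bind to it and see whether the operating system reports the address as in use. The Python sketch below does exactly that. Containers in the same pod share a network namespace, so running it in the pod reveals conflicts between them. The function name is our own, and any port you pass (for example 8080) is a hypothetical choice. Errors other than "address in use", such as permission denied for ports below 1024, are re-raised rather than misreported.

```python
import errno
import socket


def port_in_use(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if some other socket already holds host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError as exc:
            if exc.errno == errno.EADDRINUSE:
                return True
            raise  # e.g. permission denied for ports below 1024
    return False
```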


Conclusion

To sum up, our Support team explained the CrashLoopBackOff error in Kubernetes, its common causes, how to diagnose and resolve it, and how to prevent it.
