What is CI/CD in ML Pipelines?

CI/CD (Continuous Integration and Continuous Delivery/Deployment) in machine learning pipelines is a specialized approach designed to automate and streamline the lifecycle of machine learning model development, testing, and deployment.

It adapts traditional CI/CD principles to address the unique challenges of machine learning, such as managing data dependencies, ensuring reproducibility, and continuously monitoring model performance. By implementing CI/CD in ML pipelines, organizations can achieve faster iterations, maintain model quality, and deploy updates with greater confidence.

CI/CD consists of two main components:

Continuous Integration (CI): The Foundation of Modern Software Development

Continuous Integration is a development practice built on frequent, systematic code integration. At its core, CI creates a collaborative environment in which developers consistently merge their code changes into a central repository.

Key Characteristics of CI:

- Developers integrate code changes multiple times daily

- Automated build processes verify code integrity

- Immediate testing and validation of code modifications

- Rapid identification and resolution of potential bugs

The primary goal of CI is to reduce integration challenges, minimize development conflicts, and create a more streamlined, efficient development process. By automating builds and tests, teams can quickly detect and address potential issues before they escalate.

Continuous Delivery/Deployment (CD): Enhancing Software Release Efficiency

Continuous Delivery and Continuous Deployment are related practices that automate and refine the release process. They differ in whether the final push to production is triggered manually or automatically.

CD Approaches:

Continuous Delivery:

- Prepares code changes for production release.

- Provides the option for manual deployment triggers.

- Ensures the codebase is always in a deployable state.

Continuous Deployment:

- Automatically deploys updates to production.

- Requires all updates to pass rigorous automated tests.

- Removes the need for manual intervention in deployment processes.
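The difference between the two CD approaches comes down to who triggers the final release. The sketch below is only an illustration of that decision; the function name `should_release` and the `mode` values are hypothetical and do not correspond to any specific CI/CD tool.

```python
def should_release(tests_passed: bool, mode: str, manually_approved: bool = False) -> bool:
    """Decide whether a build goes to production (illustrative logic only)."""
    if not tests_passed:
        # In both approaches, a failing build is never released.
        return False
    if mode == "deployment":
        # Continuous Deployment: passing tests are enough.
        return True
    if mode == "delivery":
        # Continuous Delivery: the build is ready, but a person triggers the release.
        return manually_approved
    raise ValueError(f"unknown mode: {mode}")


print(should_release(True, "deployment"))                        # True
print(should_release(True, "delivery"))                          # False
print(should_release(True, "delivery", manually_approved=True))  # True
```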

The Synergy of CI/CD

Together, CI/CD forms a comprehensive framework for software development that:

- Reduces manual errors.

- Speeds up development cycles.

- Promotes better collaboration among teams.

- Enhances the quality of software.

- Improves responsiveness to market demands.

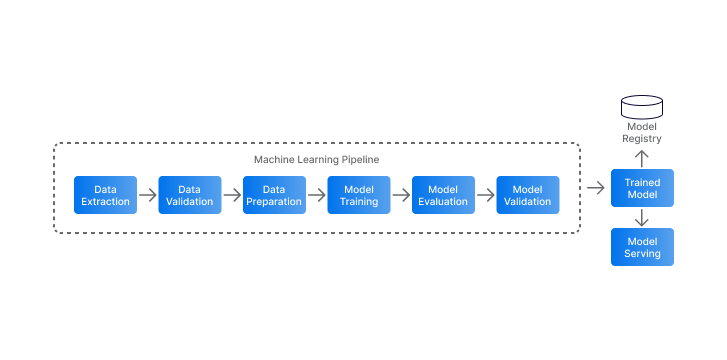

What is an ML Pipeline?

An ML pipeline is a comprehensive, end-to-end workflow designed to convert raw data into deployable machine learning models. It achieves this transformation through a well-defined sequence of interconnected stages. Unlike traditional data processing methods, ML pipelines offer a structured and automated approach to turning complex data into predictive solutions.

Core Stages of an ML Pipeline:

- Data Collection and Preprocessing: Gathering and cleaning raw data.

- Feature Engineering and Selection: Identifying and preparing key features.

- Model Training and Optimization: Developing and fine-tuning the model.

- Rigorous Model Evaluation: Validating model accuracy and performance.

- Seamless Model Deployment: Integrating the model into production environments.

- Continuous Performance Monitoring: Tracking and maintaining model efficacy.

Key Characteristics of ML Pipelines:

- Sequentiality: Each stage follows a specific and dependent order.

- Interconnectedness: The output of one stage serves as the input for the next.

- Repeatability: Processes can be reproduced and refined consistently.

- Automation: Reduces the need for manual intervention throughout the workflow.
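The sequential and interconnected nature of these stages can be sketched as a chain of functions in which each stage's output is the next stage's input. This is a minimal illustration, not a real framework: the stage names (`collect`, `preprocess`, `train`, `evaluate`), the sample data, and the toy "model" (a single slope) are all assumptions made for the example.

```python
from statistics import mean


def collect():
    # Hypothetical raw data: (feature, label) pairs, one with a missing label.
    return [(1.0, 2.0), (2.0, 4.0), (3.0, None), (4.0, 8.0)]


def preprocess(rows):
    # Data cleaning: drop rows whose label is missing.
    return [(x, y) for x, y in rows if y is not None]


def train(rows):
    # Toy "model": one slope, estimated as the mean of y / x.
    return {"slope": mean(y / x for x, y in rows)}


def evaluate(model, rows):
    # Mean absolute error of the toy model on the given rows.
    return mean(abs(model["slope"] * x - y) for x, y in rows)


def run_pipeline():
    # Each stage consumes the output of the previous one.
    rows = preprocess(collect())
    model = train(rows)
    error = evaluate(model, rows)
    return model, error


model, error = run_pipeline()
print(model, error)
```

Because every stage is an ordinary function, the whole run is repeatable: calling `run_pipeline()` again on the same input produces the same model and the same error.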

CI/CD in ML Pipelines

CI/CD in machine learning adapts traditional software development automation principles to meet the unique demands of machine learning workflows. It addresses ML-specific challenges such as data dependencies, reproducibility, and ongoing model monitoring, making the development process more efficient, reliable, and scalable.

Benefits of CI/CD in ML Pipelines

Accelerated Development Cycles

- Shorten the time from model conception to production.

- Enable rapid experimentation with new ideas.

- Minimize delays caused by development bottlenecks.

Minimized Manual Intervention

- Automate repetitive and time-consuming tasks.

- Reduce the likelihood of human error.

- Improve overall operational efficiency.

Enhanced Model Reproducibility

- Ensure consistent and repeatable model development processes.

- Provide clear and transparent model versioning.

- Support collaborative development across teams.

Consistent Model Quality Maintenance

- Use standardized quality control measures.

- Continuously validate and monitor model performance.

- Uphold high reliability and accuracy standards.

Rapid Iteration and Deployment

- Enable frequent and fast model updates.

- Adapt quickly to evolving business needs.

- Maintain a competitive edge in technology and innovation.

Best Practices for Implementing CI/CD in ML Pipelines

Implementing CI/CD in machine learning demands a holistic approach to ensure efficient, reliable, and scalable model development and deployment. Below is a detailed guide to the key best practices:

1. Version Control Management: Ensuring Comprehensive Tracking and Reproducibility

Version control is crucial in machine learning pipelines, as it safeguards the integrity and traceability of the entire project ecosystem.

Tools like Git enable teams to systematically manage critical components such as code, model configurations, and data pipelines. This ensures every modification is well-documented, allowing developers to track the evolution of their project effortlessly.

Primary Objectives of Version Control:

- Maintain a robust historical record of changes.

- Facilitate collaborative development among team members.

- Provide reliable rollback capabilities when needed.

Data, often considered the backbone of ML algorithms, requires the same meticulous tracking so that all downstream processes can replicate experimental conditions accurately.

Key Strategies for Effective Version Control:

- Adopt consistent branching and merging practices to streamline collaboration.

- Log all dependencies, ensuring they are well-documented.

- Leverage environment management and containerization tools like Conda and Docker to encapsulate environments.

- Maintain clear and detailed documentation of configuration changes for transparency.
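One way to tie an experiment to the exact data it used is to record a content hash of the dataset next to the code and configuration. The sketch below uses Python's standard `hashlib`; the function names `fingerprint` and `build_manifest`, the manifest layout, and the sample CSV bytes are hypothetical and only illustrate the idea.

```python
import hashlib
import json


def fingerprint(data: bytes) -> str:
    # SHA-256 digest of the raw bytes: identical content gives an identical digest.
    return hashlib.sha256(data).hexdigest()


def build_manifest(dataset: bytes, params: dict) -> dict:
    # Hypothetical manifest that could be committed alongside the code.
    return {"data_sha256": fingerprint(dataset), "params": params}


dataset_v1 = b"id,label\n1,0\n2,1\n"
dataset_v2 = b"id,label\n1,0\n2,1\n3,1\n"

manifest = build_manifest(dataset_v1, {"learning_rate": 0.01})
print(json.dumps(manifest, indent=2, sort_keys=True))

# Any change to the data changes the fingerprint.
print(fingerprint(dataset_v1) == fingerprint(dataset_v2))  # False
```

Committing a small manifest like this keeps the repository light while still making it possible to check later whether a given dataset is the one an experiment was run on.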

2. Comprehensive Automated Testing: Ensuring ML Pipeline Reliability

Automated testing is a vital quality assurance practice for machine learning pipelines. By adopting a multi-dimensional approach to testing, teams can thoroughly validate the various components and workflows of their ML systems, ensuring reliability and performance.

Testing Dimensions:

- Unit Tests: Verify the functionality of individual pipeline components in isolation.

- Integration Tests: Confirm that different modules within the pipeline work seamlessly together.

- Performance Tests: Assess the model’s performance against predefined benchmark datasets.

- Data Validation: Check the quality, consistency, and integrity of the input data rigorously.
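Two of these dimensions, unit tests and data validation, can be sketched with plain assertions. The component under test (`scale`), the validator (`validate_rows`), and the field names `age` and `income` are hypothetical examples; in practice a test runner such as pytest would discover and run the `test_*` functions.

```python
def validate_rows(rows, required=("age", "income")):
    """Return a list of human-readable problems found in the input rows."""
    problems = []
    for i, row in enumerate(rows):
        for field in required:
            if row.get(field) is None:
                problems.append(f"row {i}: missing {field}")
        age = row.get("age")
        if age is not None and not (0 <= age <= 120):
            problems.append(f"row {i}: age out of range")
    return problems


def scale(values):
    """Min-max scale a list of numbers to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Constant input: avoid division by zero.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def test_scale():
    # Unit test: one pipeline component checked in isolation.
    assert scale([10, 20, 30]) == [0.0, 0.5, 1.0]
    assert scale([5, 5]) == [0.0, 0.0]


def test_validate_rows():
    # Data validation: clean rows pass, bad rows are reported.
    good = [{"age": 30, "income": 50000}]
    bad = [{"age": 150, "income": None}]
    assert validate_rows(good) == []
    assert validate_rows(bad) == ["row 0: missing income", "row 0: age out of range"]


test_scale()
test_validate_rows()
```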

Benefits of Automated Testing:

These testing strategies enable early detection of potential issues in the development cycle, helping to:

- Prevent complications in downstream processes.

- Enhance the reliability and robustness of models before deployment.

- Ensure the overall integrity of the ML pipeline.

By integrating comprehensive automated testing, teams can build confidence in their systems and deliver consistent, high-quality results.

3. Robust Continuous Integration Pipelines: Streamlining Development

Continuous Integration (CI) pipelines are critical for ensuring code quality and consistency in machine learning projects. The goal is to create a systematic, automated workflow that efficiently validates and integrates code changes.

Key Pipeline Components:

- Build Stage: Compiling code and setting up standardized execution environments.

- Test Stage: Conducting thorough validation of code changes and assessing model performance.

- Artifact Management: Systematically storing and versioning model binaries and training datasets.

By incorporating tools such as Jenkins, GitHub Actions, and GitLab CI, teams can automate these processes, ensuring consistent and reproducible development environments. This approach streamlines workflows and helps maintain high standards across the development lifecycle.
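In the test stage, a CI job typically runs a script and treats a non-zero exit code as a failed build. The sketch below shows one possible model quality gate of that kind. The function `quality_gate`, the metric names, the baseline values, and the `max_drop` tolerance are all assumptions chosen for illustration.

```python
def quality_gate(candidate: dict, baseline: dict, max_drop: float = 0.01) -> list:
    """Return reasons to fail the build; an empty list means the gate passes."""
    failures = []
    for metric, base_value in baseline.items():
        new_value = candidate.get(metric)
        if new_value is None:
            failures.append(f"{metric}: missing from candidate")
        elif new_value < base_value - max_drop:
            failures.append(f"{metric}: {new_value:.3f} < baseline {base_value:.3f}")
    return failures


# Hypothetical metrics for the current production model and a new candidate.
baseline = {"accuracy": 0.90, "recall": 0.80}
candidate = {"accuracy": 0.91, "recall": 0.75}

failures = quality_gate(candidate, baseline)
exit_code = 1 if failures else 0  # a CI script would pass this to sys.exit()
print(failures, exit_code)
```

Here the candidate improves accuracy but loses too much recall, so the gate reports one failure and the build would be rejected before the model reaches artifact storage.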

4. Flexible Continuous Deployment: Strategic Model Release

Continuous Deployment in machine learning pipelines requires a thoughtful approach that balances innovation with stability. The goal is to enable rapid model updates while minimizing disruptions to the production environment.

Deployment Best Practices:

- Create Staging Environments: Set up environments that replicate production conditions for testing.

- Automate Deployment Processes: Streamline and automate the deployment of models to reduce manual effort.

- Implement Incremental Rollout Mechanisms: Gradually release updates to control their impact and detect issues early.

- Develop Robust Rollback Strategies: Prepare for quick reversion to previous versions in case of issues.

- Integrate Version Control with Deployment Workflows: Ensure smooth coordination between version control systems and deployment processes.

By adopting these strategies, organizations can efficiently manage the complex task of moving machine learning models from development to production, ensuring stability while enabling quick updates.
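Incremental rollout and rollback can be sketched as two small decisions: which model serves a given request, and how far to open the rollout next. The code below is a simplified illustration. The names `bucket`, `choose_model`, and `next_rollout`, the doubling schedule, and the 5% error threshold are assumptions, not a prescribed policy.

```python
import hashlib


def bucket(user_id: str) -> int:
    # Stable bucket in [0, 100) derived from the user id, so a user
    # keeps seeing the same model between requests.
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def choose_model(user_id: str, rollout_percent: int) -> str:
    # Users whose bucket falls below the rollout percentage get the new model.
    return "candidate" if bucket(user_id) < rollout_percent else "stable"


def next_rollout(current_percent: int, error_rate: float, max_error: float = 0.05) -> int:
    # Roll back to 0% if the candidate misbehaves; otherwise double exposure, capped at 100%.
    if error_rate > max_error:
        return 0
    return min(100, max(1, current_percent * 2))


print(choose_model("alice", 0))    # stable
print(choose_model("alice", 100))  # candidate
print(next_rollout(10, 0.01))      # 20
print(next_rollout(10, 0.20))      # 0
```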

5. Continuous Monitoring and Feedback: Maintaining Model Performance

Continuous monitoring is essential for ensuring the long-term reliability and performance of machine learning models. By setting up thorough tracking systems, teams can proactively detect and resolve potential issues.

Monitoring Essentials:

- Track Detailed Model Performance Metrics: Continuously measure and analyze key performance indicators.

- Establish Robust Logging and Alerting Systems: Set up systems to log relevant events and trigger alerts for any anomalies.

- Create Iterative Feedback Loops: Implement mechanisms for continuous feedback to refine and improve the model.

- Detect Critical Issues: Monitor for issues like data drift or performance degradation.

- Implement Automated Retraining and Optimization: Automate the process of retraining models to adapt to new data and optimize performance.

These strategies help maintain high-quality, consistent model performance while enabling timely intervention when necessary.
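As a rough illustration of data drift detection, the sketch below compares the mean of a feature in recent data against a reference sample, measured in reference standard deviations. This is a deliberately simple heuristic chosen for the example; production systems often use more robust statistical tests. The function names, the threshold of 2.0, and the sample values are assumptions.

```python
from statistics import mean, stdev


def mean_shift_score(reference, current):
    """Shift of the current mean, in units of the reference standard deviation."""
    ref_std = stdev(reference)
    if ref_std == 0:
        # Constant reference: any change in the mean counts as an unbounded shift.
        return 0.0 if mean(current) == mean(reference) else float("inf")
    return abs(mean(current) - mean(reference)) / ref_std


def drift_detected(reference, current, threshold=2.0):
    return mean_shift_score(reference, current) > threshold


reference = [10.0, 11.0, 9.0, 10.5, 9.5]   # feature values seen at training time
similar = [10.2, 9.8, 10.1, 9.9, 10.0]     # recent values, same distribution
shifted = [15.0, 16.0, 14.5, 15.5, 15.0]   # recent values after a shift

print(drift_detected(reference, similar))  # False
print(drift_detected(reference, shifted))  # True
```

A check like this could feed the alerting system or act as the trigger for automated retraining.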

6. Automation Emphasis: Reducing Human Error and Increasing Efficiency

Workflow automation is a key strategy for enhancing machine learning pipelines, as it minimizes manual intervention and reduces the risk of errors. By automating crucial stages of the ML lifecycle, organizations can achieve higher levels of consistency and reliability.

Key Automation Domains:

- Data Ingestion: Automate the collection, import, and preparation of raw data.

- Preprocessing: Standardize data cleaning, transformation, and feature engineering processes.

- Model Training: Execute training processes with minimal human involvement.

- Testing: Implement comprehensive automated validation checks.

- Deployment: Streamline the model release and integration processes.

The primary goal is to establish a self-sustaining pipeline that operates with minimal human oversight. This reduces human error, accelerates development, and ensures reproducible results.

7. Comprehensive Testing Strategy: Ensuring Model Quality and Reliability

A strong testing approach is essential for ensuring the high quality and reliability of machine learning models. It involves a multi-faceted strategy that goes beyond traditional validation methods.

Testing Strategy Components:

- Developing Diverse Testing Methodologies: Employ a variety of testing techniques to assess different aspects of the model.

- Implementing Regular Model Validation Processes: Conduct ongoing validation to ensure the model’s robustness.

- Establishing Consistent Quality Standards: Apply uniform quality benchmarks across all stages of the pipeline.

By adopting a comprehensive testing framework, organizations can:

- Identify model weaknesses early in the development process.

- Ensure consistent performance across various datasets.

- Uphold high standards of model reliability and accuracy.

8. Collaborative Culture: Breaking Down Organizational Silos

Successful ML pipeline development thrives on breaking down traditional organizational barriers and fostering a culture of cross-functional collaboration. This approach ensures that teams work together towards shared objectives.

Collaboration Strategies:

- Encouraging Open Communication: Facilitate dialogue between data scientists, engineers, and operations teams.

- Creating a Shared Understanding: Align all teams on project goals and challenges.

- Developing Collaborative Tools and Platforms: Build tools that support team collaboration and efficiency.

- Promoting Knowledge Sharing and Joint Problem-Solving: Encourage collective problem-solving and the exchange of expertise.

By dismantling silos, organizations can leverage diverse skills, accelerate innovation, and create more robust machine learning solutions.

9. Detailed Documentation: Knowledge Management and Transparency

Documentation is a vital tool for preserving knowledge and ensuring clear communication in ML pipeline development. It provides a thorough record of processes, configurations, and best practices.

Documentation Best Practices:

- Comprehensively Documenting CI/CD Processes: Capture the details of all CI/CD workflows and procedures.

- Recording Detailed Configuration and Implementation Details: Maintain an accurate record of configurations and how they were implemented.

- Maintaining Clear, Accessible Guidelines: Provide easily accessible guidelines for team members.

- Creating Version-Controlled Documentation Repositories: Store documentation in version-controlled systems for better tracking and management.

Effective documentation ensures:

- Smooth knowledge transfer between team members.

- Easier troubleshooting and maintenance.

- A consistent understanding of pipeline architecture.

10. Infrastructure Considerations: Strategic Planning for ML Success

Strategic infrastructure planning is essential for developing scalable and efficient machine learning pipelines. It involves making informed decisions about computational resources and deployment environments to ensure long-term success.

Infrastructure Planning Dimensions:

- Selecting Appropriate Infrastructure: Choose between cloud-based or on-premises solutions based on project needs.

- Evaluating Computational Scalability Requirements: Assess the scalability needs to handle increasing data or model complexity.

- Managing Infrastructure Costs Effectively: Optimize costs while meeting infrastructure demands.

- Ensuring Flexibility for Future Technological Advancements: Plan for infrastructure that can adapt to future technological changes.

Key considerations include:

- Performance requirements

- Cost optimization

- Scalability potential

- Compliance and security needs

11. Security and Compliance: Safeguarding ML Infrastructure and Data

Security and compliance are essential pillars of a reliable machine learning pipeline. Organizations need to implement robust security measures to protect sensitive data, adhere to regulations, and maintain the integrity of their systems throughout the ML lifecycle.

Key Security Dimensions:

- Data Encryption: Use strong encryption protocols to secure data both at rest and in transit.

- Access Control: Implement authentication and role-based access control to prevent unauthorized access.

- Audit Logging: Maintain detailed records of deployments and access activities for traceability.

- Regulatory Compliance: Ensure the pipeline adheres to industry-specific legal and regulatory requirements.

The objective is to establish a secure framework that protects intellectual property, prevents unauthorized intrusions, and ensures transparency in ML operations.
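Role-based access control and audit logging can be combined so that every attempted action is both checked and recorded. The sketch below is illustrative only. The role names, the permission sets, the in-memory `audit_log` list, and the `authorize` function are hypothetical; a real system would rely on its platform's identity and logging services.

```python
from datetime import datetime, timezone

# Hypothetical mapping from role to permitted pipeline actions.
ROLE_PERMISSIONS = {
    "data_scientist": {"train", "evaluate"},
    "ml_engineer": {"train", "evaluate", "deploy"},
    "viewer": set(),
}

# In-memory stand-in for a durable audit trail.
audit_log = []


def authorize(user: str, role: str, action: str) -> bool:
    # Unknown roles get no permissions.
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    # Record every attempt, allowed or not, for traceability.
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed


print(authorize("dana", "ml_engineer", "deploy"))    # True
print(authorize("sam", "data_scientist", "deploy"))  # False
print(len(audit_log))                                # 2
```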

12. Modular Pipeline Design: Boosting Flexibility and Maintainability

A modular pipeline design is a strategic method for constructing adaptable, efficient, and maintainable machine learning workflows. By decomposing complex pipelines into smaller, independent components, organizations can enhance their system’s scalability and simplify development processes.

Modular Design Principles:

- Decomposition: Break pipelines into discrete, manageable components that operate independently.

- Individual Module Testing: Enable targeted testing and optimization of each module.

- Clear Interfaces: Define explicit communication pathways between pipeline stages to ensure seamless integration.

- Component Flexibility: Design modules for easy updates, replacements, or enhancements.

Key Advantages:

- Scalability: Simplifies scaling efforts by isolating growth to specific modules.

- Reduced Complexity: Focuses on smaller, manageable pipeline sections to improve clarity.

- Efficient Development: Enables parallel development and faster iterations.

- Easier Maintenance: Facilitates pinpointing and resolving issues without affecting the entire pipeline.

Adopting a modular design empowers teams to build machine learning pipelines that are both robust and adaptable to evolving business needs.
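A clear interface between modules can be expressed in Python with `typing.Protocol`: any class with a matching `run` method can be used as a stage, tested on its own, and swapped out later. The `Stage` protocol and the two example stages below are hypothetical and kept deliberately small.

```python
from typing import List, Protocol


class Stage(Protocol):
    # The shared interface: every stage maps a list of numbers to a list of numbers.
    def run(self, data: List[float]) -> List[float]: ...


class DropNegatives:
    def run(self, data: List[float]) -> List[float]:
        return [x for x in data if x >= 0]


class Normalize:
    def run(self, data: List[float]) -> List[float]:
        total = sum(data)
        # Leave the data unchanged if it sums to zero, to avoid dividing by zero.
        return [x / total for x in data] if total else data


def run_stages(stages: List[Stage], data: List[float]) -> List[float]:
    # Stages are independent; the pipeline only relies on the shared interface.
    for stage in stages:
        data = stage.run(data)
    return data


result = run_stages([DropNegatives(), Normalize()], [1.0, -2.0, 3.0])
print(result)  # [0.25, 0.75]
```

Because each stage only depends on the interface, replacing `Normalize` with a different scaling step would not require changes to `run_stages` or to the other stages.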

13. Right Tooling: Strategic Technology Selection

Choosing the right tools is vital for designing and maintaining efficient machine learning pipelines. A well-defined tooling strategy ensures alignment with project goals, compatibility with existing infrastructure, and long-term scalability.

Tooling Selection Criteria:

- Testing Support: Select tools that support comprehensive testing frameworks, including unit, integration, and performance testing.

- Project-Specific Requirements: Ensure tools align with unique objectives, such as specialized data preprocessing or model deployment needs.

- Infrastructure Compatibility: Choose tools that seamlessly integrate with existing systems, minimizing configuration efforts.

- Scalability and Future-Proofing: Prioritize tools capable of handling increased workloads.

Best Practices for Tool Selection:

- Thorough Evaluations: Conduct in-depth assessments of tools based on their feature sets, user community, and case studies.

- Avoid Mid-Development Changes: Standardize on selected tools early to prevent disruptions and rework during pipeline development.

- Flexibility and Integration: Choose tools that offer modular design, allowing integration with other platforms and technologies.

A strategic approach to tooling empowers teams to build resilient, scalable pipelines tailored to their specific business and technical requirements.

14. Continuous Learning: Adaptive Pipeline Management

Continuous learning is a proactive strategy that keeps machine learning pipelines relevant and effective through iterative improvement. It supports technological adaptation and systematic optimization, keeping pipelines aligned with evolving needs.

Continuous Learning Strategies:

- Regular Performance Monitoring: Continuously track key metrics to identify performance trends and anomalies in real time.

- Systematic Process Improvement: Regularly assess workflows and refine processes to eliminate inefficiencies and improve accuracy.

- Proactive Adaptation to New ML Technologies: Stay informed about advancements in machine learning tools and frameworks and integrate them strategically.

- Implementing Feedback Loops: Collect insights from model outcomes, user interactions, and system behavior to inform iterative updates.

Key Objectives:

- Pipeline Relevance: Ensure pipelines adapt to changing data characteristics, business objectives, and market trends.

- Optimization Opportunities: Identify and address areas for improvement, such as reducing latency or improving scalability.

- Technological Advancements: Incorporate emerging methodologies to maintain a competitive edge in machine learning operations.

Adopting continuous learning practices fosters resilience and innovation, ensuring machine learning pipelines remain efficient, effective, and future-ready.

15. Performance Optimization: Maximizing Computational Efficiency

Performance optimization is critical for ensuring that machine learning pipelines operate efficiently, utilizing resources effectively while maintaining high throughput and reliability. This involves streamlining processes and addressing bottlenecks to achieve optimal computational performance.

Optimization Techniques:

- Task Parallelization: Split tasks into parallel processes to reduce overall execution time and enhance pipeline efficiency.

- Individual Pipeline Stage Optimization: Analyze and refine each stage of the pipeline, such as data preprocessing, model training, and deployment, for maximum efficiency.

- Systematic Reduction of Execution Time: Minimize delays by identifying redundant processes and streamlining workflows.

- Resource Allocation Management: Dynamically allocate computational resources to match task requirements, ensuring no underutilization or resource wastage.
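Task parallelization can be sketched with Python's standard `concurrent.futures` module. The example assumes the work splits into independent shards; `preprocess_shard` and the sample data are hypothetical stand-ins. A thread pool is used here for simplicity and suits I/O-bound work, while CPU-bound work would usually call for a process pool instead.

```python
from concurrent.futures import ThreadPoolExecutor


def preprocess_shard(shard):
    # Stand-in for an independent per-shard task, such as loading and cleaning one file.
    return [x * 2 for x in shard]


shards = [[1, 2], [3, 4], [5, 6]]

with ThreadPoolExecutor(max_workers=3) as pool:
    # map() returns results in the same order as the input shards.
    results = list(pool.map(preprocess_shard, shards))

print(results)  # [[2, 4], [6, 8], [10, 12]]
```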

Strategic Approaches:

- Identifying Computational Bottlenecks: Use profiling tools to pinpoint areas where performance lags and prioritize improvements.

- Implementing Efficient Processing Algorithms: Leverage optimized algorithms and libraries tailored to machine learning tasks to reduce processing time.

- Leveraging Advanced Computational Resources: Utilize cutting-edge hardware (e.g., GPUs, TPUs) and cloud-based solutions to scale effectively.

- Continuous Performance Measurement and Improvement: Regularly monitor pipeline performance metrics and iteratively refine the processes.

By integrating these practices into ML pipelines, organizations can accelerate development cycles, optimize resource usage, and maintain high-quality outputs, ensuring that CI/CD workflows remain robust, scalable, and efficient.


Conclusion

Implementing CI/CD in machine learning pipelines is essential for building efficient, scalable, and reliable workflows that meet the dynamic demands of modern businesses. By adopting best practices such as version control, automated testing, modular design, and continuous monitoring, organizations can streamline model development, ensure consistent quality, and accelerate deployment cycles.

These strategies not only reduce operational bottlenecks but also foster collaboration, enhance security, and enable rapid adaptation to evolving technologies.

Bobcares AI support and development services are designed to help organizations seamlessly integrate and optimize CI/CD pipelines for machine learning. From ensuring efficient model deployment to providing robust infrastructure support, Bobcares empowers businesses to unlock the full potential of their AI initiatives.

By partnering with experts like Bobcares, organizations can confidently implement these best practices and deliver high-quality ML solutions that are both agile and future-ready.
