Learn how to deploy containerized web applications on GKE. Our Kubernetes Support team is here to help you with your questions and concerns.

Deploying containerized web applications on GKE

Packaging a web application in a Docker container image and running that image on a Google Kubernetes Engine (GKE) cluster can be a walk in the park with the right guide.

This will also allow us to deploy the web application as a load-balanced set of replicas that can be scaled up or down as needed.

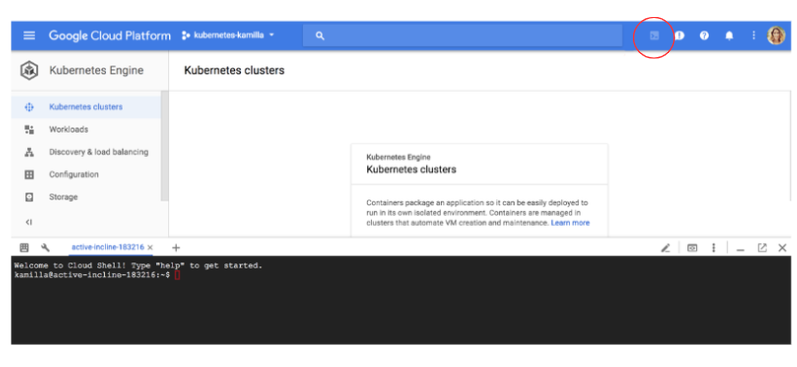

Before we begin, we would like to point out that our engineers use Google Cloud Shell, which comes with the gcloud, docker, and kubectl command-line tools.

We can start using it by opening Kubernetes Engine in the Google Cloud console and clicking the Activate Cloud Shell button at the top right of the console window.

This will open up a Cloud Shell session at the bottom of the console and display a command-line prompt.

We can set default configuration values with these commands:

$ gcloud config set project PROJECT_ID

$ gcloud config set compute/zone us-west1-a

Now, let’s get started. This guide is made up of the following 6 steps:

- Create a container cluster

- Deploy application

- Expose the application to the Internet

- Scale up the application

- Deploying the sample app to GKE

- Clean up

1. Create a container cluster

- To begin with, create a container cluster to run the container image by running this command:

$ gcloud container clusters create bobcares-cluster --num-nodes=3

This creates a three-node cluster.

Our experts point out that cluster creation can take a few minutes.

- Now, we can view the cluster’s three worker VM instances with this command:

$ gcloud compute instances list

2. Deploy application

- Now, we will be able to use Kubernetes to deploy applications to the cluster.

- The kubectl command-line tool helps deploy and manage applications on a Kubernetes Engine cluster.

For example, we can create a simple nginx docker container as seen here:

$ kubectl create deployment bobcares-nginx --image=nginx --port=80

- Then, we can see the Pod created by the Deployment with this command:

$ kubectl get pods
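As a sketch, the same Deployment can also be written declaratively and applied with `kubectl apply -f`. The manifest below assumes the `app: bobcares-nginx` label (the label `kubectl create deployment` adds by default) and a hypothetical file name such as `bobcares-nginx-deployment.yaml`; treat it as a minimal illustration under those assumptions.

```yaml
# Hypothetical file: bobcares-nginx-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: bobcares-nginx
  labels:
    app: bobcares-nginx          # assumed label, matching kubectl's default
spec:
  replicas: 1                    # one replica, as with the imperative command
  selector:
    matchLabels:
      app: bobcares-nginx        # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: bobcares-nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80  # port the nginx container listens on
```

Running `kubectl apply -f bobcares-nginx-deployment.yaml` creates the Deployment (or updates it if it already exists), and its Pod then shows up in `kubectl get pods` as before.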

3. Expose the application to the Internet

Our experts would like to point out that, by default, the containers we run on Kubernetes Engine are not accessible from the Internet, because they do not have external IP addresses.

Hence, we can use one of the following methods to make our HTTP(S) web server application publicly accessible:

- Expose the nginx deployment as a LoadBalancer Service

This method exposes the application to traffic from the Internet by creating a TCP load balancer with an external IP address. A TCP load balancer works for HTTP web servers.

However, it does not terminate HTTP(S) traffic, because it is not aware of individual HTTP(S) requests. In addition, Kubernetes Engine does not configure health checks for TCP load balancers.
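For comparison, here is a minimal sketch of this first option as a manifest: a Service of type LoadBalancer. The Service name `bobcares-nginx-lb` is hypothetical (chosen so it does not clash with the NodePort Service created below), and the selector assumes the Pods carry the `app: bobcares-nginx` label. Roughly the same result should come from `kubectl expose deployment bobcares-nginx --name=bobcares-nginx-lb --port=80 --type=LoadBalancer`.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: bobcares-nginx-lb      # hypothetical name
spec:
  type: LoadBalancer           # GKE provisions an external TCP load balancer
  selector:
    app: bobcares-nginx        # assumed Pod label
  ports:
    - protocol: TCP
      port: 80                 # port exposed on the load balancer's external IP
      targetPort: 80           # nginx container port
```

Once the load balancer is provisioned, `kubectl get service bobcares-nginx-lb` lists its external IP in the EXTERNAL-IP column.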

- To make the bobcares-nginx deployment reachable within the container cluster, we create a Service resource of type NodePort. This internal Service is what the Ingress option below routes traffic to:

$ kubectl expose deployment bobcares-nginx --port=80 --target-port=80 --type=NodePort

- Then, we can verify that the Service was created and that a node port was allocated with this command:

$ kubectl get service bobcares-nginx
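The `kubectl expose` command above can be sketched as the following manifest. It assumes the Deployment's Pods are labeled `app: bobcares-nginx`; the node port itself is left for Kubernetes to allocate, and that allocated value is what `kubectl get service bobcares-nginx` reports.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: bobcares-nginx
spec:
  type: NodePort               # opens a port on every node (30000-32767 by default)
  selector:
    app: bobcares-nginx        # assumed Pod label
  ports:
    - protocol: TCP
      port: 80                 # cluster-internal Service port
      targetPort: 80           # container port that receives the traffic
```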


- Create an Ingress resource

Alternatively, we can expose the application as a Service internally and create an Ingress resource. The ingress controller then creates an HTTP(S) load balancer, which terminates HTTP(S) requests.

This lets it make better context-aware load-balancing decisions. Furthermore, it supports features such as customizable URL maps and TLS termination.

Additionally, Kubernetes Engine automatically configures health checks for HTTP(S) load balancers.

On Kubernetes Engine, Ingress is implemented with Cloud Load Balancing. When we create an Ingress in our cluster, Kubernetes Engine creates an HTTP(S) load balancer and configures it to route traffic to our application.

Here is a basic YAML configuration file. It defines an Ingress resource that directs traffic to the Service:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: basic-ingress
spec:
  defaultBackend:
    service:
      name: bobcares-nginx
      port:
        number: 80

- First, save the content above to a YAML file with this command:

$ echo '... [yaml_text_here]' > basic-ingress.yaml

- Then, run:

$ kubectl apply -f basic-ingress.yaml

- The ingress controller running in our cluster then creates an HTTP(S) load balancer that routes all external HTTP traffic to the bobcares-nginx Service.

On Google Kubernetes Engine, this ingress controller is provided for us. In environments other than GCE/Google Kubernetes Engine, we need to deploy an ingress controller as a pod ourselves.

- Next, we can find out the load balancer’s external IP address with this command:

$ kubectl get ingress basic-ingress

By default, Kubernetes Engine allocates an ephemeral external IP address to HTTP applications exposed through an Ingress. If the address needs to stay fixed, we can convert it to a static IP address.
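One way to do this, sketched under assumptions: reserve a global static external IP address with `gcloud compute addresses create bobcares-ip --global` (the name `bobcares-ip` is hypothetical), then reference it from the Ingress through the `kubernetes.io/ingress.global-static-ip-name` annotation. The Ingress then uses the reserved address instead of its current ephemeral one, so the address shown by `kubectl get ingress basic-ingress` changes to the reserved IP.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: basic-ingress
  annotations:
    # Name of a reserved *global* static IP address (hypothetical name).
    kubernetes.io/ingress.global-static-ip-name: bobcares-ip
spec:
  defaultBackend:
    service:
      name: bobcares-nginx     # NodePort Service created earlier
      port:
        number: 80
```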

The Ingress option is generally considered the better method for HTTP(S) load balancing and offers more features. That is why, in this article, our Support Techs stick to the second option: an Ingress resource with an ingress controller and an HTTP(S) load balancer.
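The URL maps and TLS termination mentioned earlier correspond to the `rules` and `tls` sections of an Ingress. The sketch below is illustrative only: the Ingress name `bobcares-tls-ingress`, the host `www.example.com`, and the Secret `bobcares-tls` are hypothetical, and the Secret would have to exist beforehand (for example, created with `kubectl create secret tls bobcares-tls --cert=CERT_FILE --key=KEY_FILE`).

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: bobcares-tls-ingress         # hypothetical name
spec:
  tls:
    - hosts:
        - www.example.com            # hypothetical host name
      secretName: bobcares-tls       # hypothetical kubernetes.io/tls Secret
  rules:                             # GKE builds the load balancer's URL map from these rules
    - host: www.example.com
      http:
        paths:
          - path: /
            pathType: Prefix         # every path under / goes to bobcares-nginx
            backend:
              service:
                name: bobcares-nginx
                port:
                  number: 80
```

Additional `paths` entries (or additional `rules` for other hosts) would route different URLs to different Services through the same load balancer.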


4. Scale up your application

- Now, we are going to add two additional replicas to our Deployment with this command:

$ kubectl scale deployment bobcares-nginx --replicas=3

- Then, we can see the new replicas running on our cluster with these commands:

$ kubectl get deployment bobcares-nginx

$ kubectl get pods

Once the new replicas are ready, the HTTP(S) load balancer starts routing traffic to them automatically.
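Scaling can also be expressed as a patch. As a minimal sketch, the strategic merge patch below changes only `spec.replicas`; the file name `scale-patch.yaml` is hypothetical, and it would be applied with `kubectl patch deployment bobcares-nginx --patch-file scale-patch.yaml`.

```yaml
# Hypothetical file: scale-patch.yaml
# Strategic merge patch: only the fields listed here are changed.
spec:
  replicas: 3   # same target as kubectl scale --replicas=3
```

If the Deployment is managed from a manifest with `kubectl apply`, editing `replicas` in that file and re-applying it keeps the file and the cluster in sync instead.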

5. Deploying the sample app to GKE

Kubernetes represents applications as Pods. These Pods are the smallest deployable unit in Kubernetes.

In most cases, Pods are deployed as a set of replicas. These can be scaled and distributed together across our cluster. We can deploy a set of replicas via a Kubernetes Deployment.

Here, we are going to create a Kubernetes Deployment to run quiz-app on our cluster. This Deployment runs three replicas.

- In Cloud Shell:

- First, we have to make sure we are connected to our GKE cluster:

gcloud container clusters get-credentials quiz-cluster --region REGION

- Then, create a Kubernetes Deployment for our quiz-app Docker image:

kubectl create deployment quiz-app --image=REGION-docker.pkg.dev/${PROJECT_ID}/quiz-repo/quiz-app:v1

- Next, set the baseline number of Deployment replicas to 3:

kubectl scale deployment quiz-app --replicas=3

- Then, create a HorizontalPodAutoscaler resource for our Deployment:

kubectl autoscale deployment quiz-app --cpu-percent=80 --min=1 --max=5

- Now, we can see the created Pods with this command:

kubectl get pods


- In the console:

- First, head to the Workloads page in the Google Cloud console.

- Then, click Deploy.

- Next, select Existing container image in the Specify container section.

- Now, click Select in the Image path field.

- Then, select the quiz-app image we pushed to Artifact Registry and click Select in the Select container image pane.

- At this point, we have to head to the Container section, click Done, and then click Continue.

- Now, go to the Configuration section, enter app for Key and quiz-app for Value under Labels.

- Then, head to Configuration YAML and click View YAML. This will open a YAML configuration file.

- We can click Close, then click Deploy.

- As soon as the Deployment Pods are ready, we will be taken to the Deployment details page.

- Finally, under Managed pods, we can see the three running Pods of the quiz-app Deployment.
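For reference, the Cloud Shell steps can also be sketched as one manifest with two documents: the quiz-app Deployment and its HorizontalPodAutoscaler (`autoscaling/v2`). Assumptions to note: `REGION` and `PROJECT_ID` must be replaced by hand, because YAML does not expand `${PROJECT_ID}`; the `app: quiz-app` label matches the label used in the console steps; and the `100m` CPU request is an assumed value, added because CPU-utilization autoscaling is measured relative to the container's CPU request.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: quiz-app
  labels:
    app: quiz-app
spec:
  replicas: 3                        # baseline, as with kubectl scale --replicas=3
  selector:
    matchLabels:
      app: quiz-app
  template:
    metadata:
      labels:
        app: quiz-app
    spec:
      containers:
        - name: quiz-app
          # Replace REGION and PROJECT_ID by hand; YAML does not expand ${...}.
          image: REGION-docker.pkg.dev/PROJECT_ID/quiz-repo/quiz-app:v1
          resources:
            requests:
              cpu: 100m              # assumed value; the HPA target is a % of this request
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: quiz-app
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: quiz-app
  minReplicas: 1                     # same bounds as --min=1 --max=5
  maxReplicas: 5
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 80     # same target as --cpu-percent=80
```

With the autoscaler in place, the replica count can move anywhere between 1 and 5 based on CPU load, so the baseline of 3 is only a starting point.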

6. Clean up

According to our Support Team, we can avoid incurring charges to our Google Cloud account for the resources used in this tutorial by either deleting the project that contains the resources or keeping the project and deleting the individual resources.

To keep the project, we can delete the individual resources as follows:

- Delete the Service:

This deallocates the Cloud Load Balancer created for our Service. We can do this by running:

kubectl delete service quiz-app-service

- Delete the cluster:

We can delete the resources that make up the cluster by running this command:

gcloud container clusters delete quiz-cluster --region REGION

- Delete the container images:

We can delete the Docker images we pushed to the Artifact Registry with this command:

gcloud artifacts docker images delete \
REGION-docker.pkg.dev/${PROJECT_ID}/quiz-repo/quiz-app:v1 \
--delete-tags --quiet

gcloud artifacts docker images delete \
REGION-docker.pkg.dev/${PROJECT_ID}/quiz-repo/quiz-app:v2 \
--delete-tags --quiet


Conclusion

In brief, our Support Experts demonstrated how to deploy containerized web applications on GKE.
