The handling of IP addresses within a Kubernetes cluster is known as Kubernetes IPAM (IP Address Management). Bobcares, as a part of our Server Management Service, offers solutions to every query that comes our way.

Overview

- What is Kubernetes IPAM Cluster?

- Cluster API IPAM Provider In Cluster

- Benefits of Kubernetes IPAM Cluster

- Conclusion

What is Kubernetes IPAM Cluster?

Kubernetes creates, schedules, and manages pods, its smallest deployable unit. A pod holds one or more containers that share network and storage resources. A Kubernetes Service groups a logical set of pods and defines a policy for accessing them. Decoupling an app's frontend and backend through Services makes the app easier to scale and manage.

Kubernetes networking handles communication between pods, between nodes, and between pods and external services. Each pod gets its own IP address, so pods in the same cluster can communicate with one another directly.

Kubernetes IPAM (IP Address Management) is the management of IP addresses within a Kubernetes cluster: assigning addresses to pods and services and keeping track of which ones are in use. Kubernetes relies on a mix of built-in mechanisms and third-party plugins for this. For example, the API server allocates Service cluster IPs from a configured service range, the controller manager can assign each node a pod CIDR, and the cluster's network plugin assigns the individual pod addresses. Components such as kube-proxy then program forwarding rules so that traffic sent to a Service IP reaches the pods behind it. Separately, in the Cluster API ecosystem, IPAM providers let users manage the IP addresses assigned to Cluster API Machines. This is typically needed only for non-cloud deployments, and the infrastructure provider in use must support IPAM providers.
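One common way pod addresses are managed is to carve a cluster-wide pod range into one block per node, after which the network plugin on each node hands out pod IPs from its own block (in kube-controller-manager this behaviour is driven by flags such as --allocate-node-cidrs, --cluster-cidr and --node-cidr-mask-size). The Python sketch below only illustrates that split; the function name, the 10.244.0.0/16 range, the /24 block size and the node names are hypothetical, and this is not the controller's actual code.

```python
import ipaddress
from typing import Dict, List


def assign_node_cidrs(cluster_cidr: str, node_prefix: int, nodes: List[str]) -> Dict[str, str]:
    """Give each node its own pod subnet carved out of a cluster-wide pod CIDR.

    Hypothetical helper that mirrors the idea of per-node pod CIDR allocation;
    it is not the Kubernetes node IPAM controller.
    """
    # subnets() lazily yields consecutive blocks of the requested size, in order.
    blocks = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=node_prefix)
    assignments: Dict[str, str] = {}
    for node in nodes:
        block = next(blocks, None)  # next unused block, or None once the range is exhausted
        if block is None:
            raise RuntimeError(f"{cluster_cidr} has no free /{node_prefix} block left for {node}")
        assignments[node] = str(block)
    return assignments


if __name__ == "__main__":
    # Assumed values: a /16 pod range split into one /24 per node.
    print(assign_node_cidrs("10.244.0.0/16", 24, ["node-a", "node-b", "node-c"]))
    # {'node-a': '10.244.0.0/24', 'node-b': '10.244.1.0/24', 'node-c': '10.244.2.0/24'}
```

The block size is the main design trade-off: a larger per-node block leaves room for more pods per node but supports fewer nodes from the same cluster range.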

IPAM Setup | Different Ways

1. Plugins for Kubernetes Networking:

Kubernetes supports many network (CNI) plugins, including Flannel, Calico, and Cilium. These plugins handle networking and IPAM in the cluster, and each one assigns and tracks IP addresses in its own way.

2. External IPAM Solutions:

Some companies integrate Kubernetes with third-party IPAM systems. These systems control how IP addresses are distributed and connect to Kubernetes through custom controllers or plugins.

3. Tailored IPAM Solutions:

Businesses with particular networking requirements may build their own IPAM solutions tailored to those needs. Such solutions integrate with Kubernetes through custom controllers or plugins.

4. Cloud Provider Networking:

If we're running Kubernetes on AWS, Azure, or Google Cloud Platform, the cloud provider's networking solution may take care of IP address management within the cluster.

When building a Kubernetes cluster, scalability, performance, and interoperability with other cluster components are crucial considerations. Because the IPAM solution affects all three, it should match the cluster's overall requirements and deployment model.
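Item 1 notes that each network plugin assigns addresses in its own way. A common basic pattern, used in spirit by the reference host-local IPAM plugin, is to hand out a free address from a node-local range and release it when the pod goes away. The Python sketch below is a simplified, hypothetical version of that pattern: the class name, the 10.244.1.0/24 range and the idea of keeping the first host address as the node's gateway are assumptions, and real plugins also persist their state on disk, handle concurrent requests, and may pick addresses in a different order.

```python
import ipaddress
from typing import Dict, Optional


class NodeRangeAllocator:
    """Hypothetical per-node allocator: hands out the lowest free address in a range."""

    def __init__(self, cidr: str, gateway: Optional[str] = None) -> None:
        self.network = ipaddress.ip_network(cidr)
        # Normalise the gateway so it compares equal to str() of a generated address.
        self.gateway = str(ipaddress.ip_address(gateway)) if gateway else None
        self.in_use: Dict[str, str] = {}  # pod name -> assigned address

    def allocate(self, pod: str) -> str:
        if pod in self.in_use:
            return self.in_use[pod]  # repeated requests for the same pod get the same IP
        taken = set(self.in_use.values())
        for host in self.network.hosts():  # for IPv4, skips network and broadcast
            addr = str(host)
            if addr != self.gateway and addr not in taken:
                self.in_use[pod] = addr
                return addr
        raise RuntimeError(f"no free addresses left in {self.network}")

    def release(self, pod: str) -> None:
        self.in_use.pop(pod, None)  # releasing an unknown pod is a no-op


if __name__ == "__main__":
    alloc = NodeRangeAllocator("10.244.1.0/24", gateway="10.244.1.1")
    print(alloc.allocate("web-0"))  # 10.244.1.2
    print(alloc.allocate("web-1"))  # 10.244.1.3
    alloc.release("web-0")
    print(alloc.allocate("web-2"))  # 10.244.1.2 (reused after release)
```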

Cluster API IPAM Provider In Cluster

This IPAM provider manages pools of IP addresses for Cluster API using Kubernetes resources. We can use it as a simple replacement for DHCP or as a reference implementation for other IPAM providers. IPAM providers manage the allocation of IP addresses to Cluster API Machines, which is usually only relevant for non-cloud deployments. To use this provider, the infrastructure provider in use must support IPAM providers.

Important Features

1. Uses specialized Kubernetes resources to manage IP addresses within a cluster.

2. Address pools can be namespaced or cluster-wide.

3. Pools can include subnets, arbitrary address ranges, and/or individual addresses.

4. Supports both IPv4 and IPv6.

5. It is possible to exclude specific addresses, ranges, and subnets from a pool.

6. By default, well-known reserved addresses are not allocated; each pool can override this.

Setup Steps

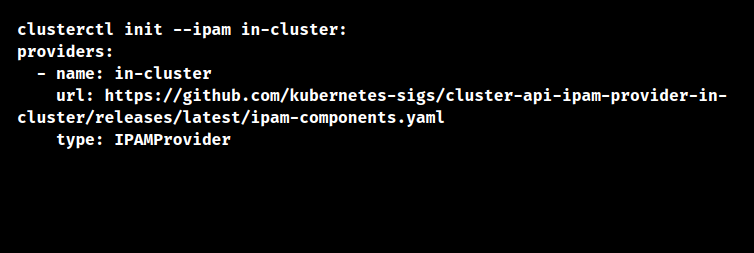

This provider supports clusterctl. Because it hasn't been added to clusterctl's built-in list of providers yet, we need to add the following to $XDG_CONFIG_HOME/cluster-api/clusterctl.yaml if we want to install it with clusterctl init --ipam in-cluster:

Usage

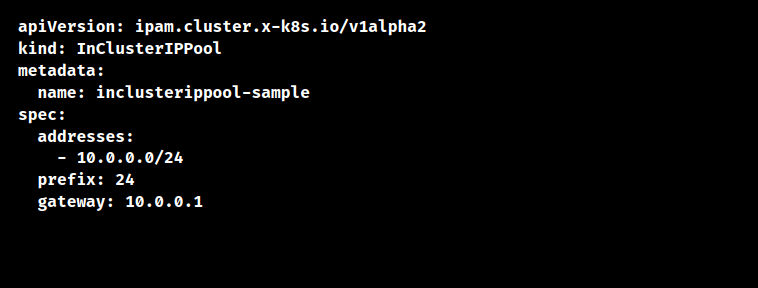

This provider offers two resources for defining the pools that addresses are allocated from: InClusterIPPool and GlobalInClusterIPPool. As the names imply, the former is namespaced and the latter is cluster-wide; otherwise they are identical. The following examples all use InClusterIPPool, but they work with GlobalInClusterIPPool as well. A basic pool that spans a whole /24 IPv4 network might look like this:

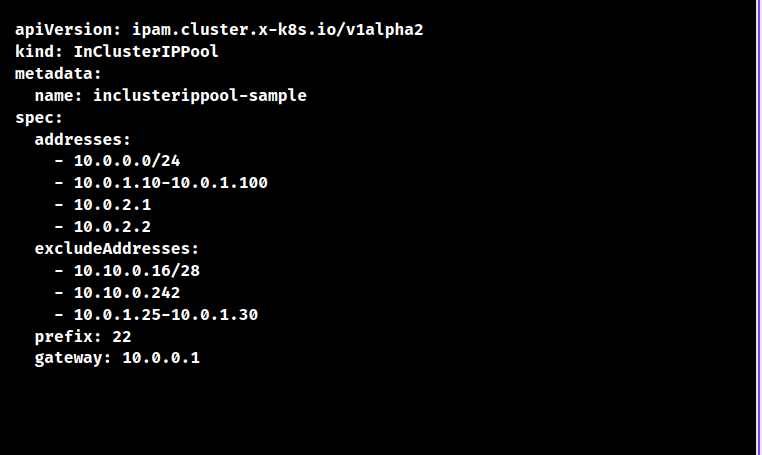

IPv6 is supported as well, but a single pool can contain either IPv4 or IPv6 addresses, not both. To keep things simple, we'll use IPv4 in the examples. The addresses field supports CIDR notation, arbitrary ranges, and individual addresses. Subnets, ranges, and individual addresses can be kept out of the pool with the excludedAddresses field.
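To make the three entry formats concrete, here is a Python sketch that expands addresses and excludedAddresses entries into a set of candidate addresses. The parsing rules (a "/" marks CIDR notation, a "-" marks a range, anything else is a single address), the function names and the example values are assumptions for illustration; this is not the provider's own implementation.

```python
import ipaddress
from typing import Iterable, Set, Union

IPAddr = Union[ipaddress.IPv4Address, ipaddress.IPv6Address]


def expand_entry(entry: str) -> Set[IPAddr]:
    """Expand one entry: CIDR ('10.0.0.0/24'), range ('a-b') or single address."""
    entry = entry.strip()
    if "/" in entry:
        # All addresses of the subnet; reserved-address filtering is a separate step.
        return set(ipaddress.ip_network(entry))
    if "-" in entry:
        start, end = (ipaddress.ip_address(part.strip()) for part in entry.split("-", 1))
        if start.version != end.version or start > end:
            raise ValueError(f"invalid range: {entry}")
        # type(start) keeps IPv4 ranges as IPv4Address and IPv6 ranges as IPv6Address.
        return {type(start)(i) for i in range(int(start), int(end) + 1)}
    return {ipaddress.ip_address(entry)}


def pool_candidates(addresses: Iterable[str], excluded: Iterable[str] = ()) -> Set[IPAddr]:
    """All addresses listed in `addresses`, minus everything listed in `excluded`."""
    pool: Set[IPAddr] = set()
    for entry in addresses:
        pool |= expand_entry(entry)
    # A single pool holds either IPv4 or IPv6 addresses, never both.
    if len({addr.version for addr in pool}) > 1:
        raise ValueError("a pool cannot mix IPv4 and IPv6 addresses")
    for entry in excluded:
        pool -= expand_entry(entry)
    return pool


if __name__ == "__main__":
    # Hypothetical pool: a /24, a range and a single address, minus some exclusions.
    pool = pool_candidates(
        ["10.0.0.0/24", "10.0.1.10-10.0.1.20", "10.0.2.10"],
        excluded=["10.0.0.16/28", "10.0.1.15-10.0.1.16", "10.0.0.200"],
    )
    print(len(pool))  # 249 = 256 + 11 + 1 - 16 - 2 - 1
```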

All addresses in the pool must fall within the prefix. The prefix is determined by the first network in the addresses list together with the prefix field, which sets the prefix length. In the example above, for instance, adding 10.1.0.0/24 to the addresses list would cause a validation error. The gateway is never allocated, and by default neither are addresses that are usually reserved. For IPv4 networks, these are the first (network) and last (broadcast) addresses of the prefix: in the example above, 10.0.0.0 and 10.0.3.255 (the latter is not part of the listed networks anyway). For IPv6 networks, the first address of the prefix is excluded.
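The prefix rule can be expressed as a small check: derive the network from the first entry in addresses plus the prefix length, then confirm that every entry lies inside it. This Python sketch uses hypothetical function names and example values; the provider runs its own validation, and its exact behaviour and error messages may differ.

```python
import ipaddress
from typing import List, Tuple, Union

IPAddr = Union[ipaddress.IPv4Address, ipaddress.IPv6Address]


def entry_bounds(entry: str) -> Tuple[IPAddr, IPAddr]:
    """Return the (first, last) address covered by a CIDR, 'a-b' range or single address."""
    entry = entry.strip()
    if "/" in entry:
        net = ipaddress.ip_network(entry)
        return net.network_address, net.broadcast_address
    if "-" in entry:
        start, end = (ipaddress.ip_address(part.strip()) for part in entry.split("-", 1))
        return start, end
    addr = ipaddress.ip_address(entry)
    return addr, addr


def pool_prefix(addresses: List[str], prefix_len: int):
    """Derive the pool's prefix from the first entry and the prefix length."""
    first, _ = entry_bounds(addresses[0])
    # strict=False masks off host bits, e.g. 10.0.0.0 with length 22 -> 10.0.0.0/22.
    return ipaddress.ip_network(f"{first}/{prefix_len}", strict=False)


def validate_pool(addresses: List[str], prefix_len: int) -> List[str]:
    """Return the entries that fall outside the derived prefix (empty list means valid)."""
    prefix = pool_prefix(addresses, prefix_len)
    bad = []
    for entry in addresses:
        lo, hi = entry_bounds(entry)
        if lo not in prefix or hi not in prefix:
            bad.append(entry)
    return bad


if __name__ == "__main__":
    print(pool_prefix(["10.0.0.0/24"], 22))  # 10.0.0.0/22
    print(validate_pool(["10.0.0.0/24", "10.0.1.10-10.0.1.100", "10.0.2.10"], 22))  # []
    print(validate_pool(["10.0.0.0/24", "10.1.0.0/24"], 22))  # ['10.1.0.0/24']
```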

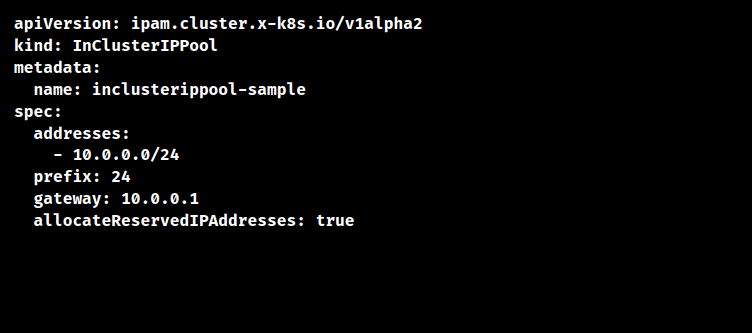

If we want to use every address in the prefix, we can set allocateReservedIPAddresses to true. For a 10.0.0.0/24 pool configured this way, both 10.0.0.0 and 10.0.0.255 will be allocated. The gateway is still skipped.
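The reservation rules can be combined into one filter: the gateway is always skipped, and unless allocateReservedIPAddresses is true, IPv4 pools also skip the network and broadcast addresses of the prefix while IPv6 pools skip the first address. The Python sketch below assumes a pool given as a single CIDR plus a prefix length and gateway; the function name and its allocate_reserved parameter (standing in for allocateReservedIPAddresses) are hypothetical. It lists every address, so it is only meant for small pools.

```python
import ipaddress
from typing import List, Optional


def allocatable(pool_cidr: str, prefix_len: int, gateway: Optional[str] = None,
                allocate_reserved: bool = False) -> List[str]:
    """List the addresses of a CIDR pool that may be handed out, in ascending order."""
    pool = ipaddress.ip_network(pool_cidr)
    prefix = ipaddress.ip_network(f"{pool.network_address}/{prefix_len}", strict=False)
    skip = set()
    if gateway:
        skip.add(ipaddress.ip_address(gateway))  # the gateway is never allocated
    if not allocate_reserved:
        skip.add(prefix.network_address)  # first address of the prefix (IPv4 and IPv6)
        if prefix.version == 4:
            skip.add(prefix.broadcast_address)  # last address, IPv4 only
    return [str(addr) for addr in pool if addr not in skip]


if __name__ == "__main__":
    default = allocatable("10.0.0.0/24", 24, gateway="10.0.0.1")
    everything = allocatable("10.0.0.0/24", 24, gateway="10.0.0.1", allocate_reserved=True)
    print(len(default), default[0], default[-1])            # 253 10.0.0.2 10.0.0.254
    print(len(everything), everything[0], everything[-1])   # 255 10.0.0.0 10.0.0.255
```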

Benefits of Kubernetes IPAM Cluster

There are various advantages to implementing IPAM in a Kubernetes cluster, such as:

1. Kubernetes IPAM assigns IP addresses to the cluster's pods and services efficiently.

2. Kubernetes IPAM solutions are usually built to scale, so they can handle large clusters with hundreds of nodes and pods.

3. Kubernetes IPAM allows flexible configuration of IP address ranges and allocation policies. Admins can specify distinct CIDR ranges for pod and service IPs and configure IP assignment behaviour to suit particular needs.

4. Kubernetes network policies govern communication between pods and with external resources. They give admins control over traffic flow and let them enforce security policies based on IP addresses and namespaces.

5. By automating IP address assignment and management, Kubernetes IPAM solutions reduce management overhead and manual intervention.

6. Many Kubernetes IPAM solutions are designed with high availability and resilience to failures in mind.

7. When several teams or tenants share a single Kubernetes cluster, IPAM solutions can isolate and segment network resources. Admins can set up distinct IP address pools and policies for different namespaces or tenant groups, which keeps resource usage safe and efficient.

8. Kubernetes IPAM solutions are designed to work across a variety of deployment environments, from public cloud platforms to on-premises data centres.
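Item 3 mentions separate CIDR ranges for pods and services. These ranges should not overlap with each other or with the node network, which is easy to check before a cluster is created. The Python sketch below is a hypothetical pre-flight check; the function name and the example ranges are assumptions, not requirements or defaults of any particular distribution.

```python
import ipaddress
from itertools import combinations
from typing import Dict, List, Tuple


def find_overlaps(ranges: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return every pair of named CIDR ranges that overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(nets.items(), 2)
        # IPv4 and IPv6 ranges can never overlap, so only compare matching versions.
        if net_a.version == net_b.version and net_a.overlaps(net_b)
    ]


if __name__ == "__main__":
    good = {"pods": "10.244.0.0/16", "services": "10.96.0.0/12", "nodes": "192.168.10.0/24"}
    bad = {"pods": "10.96.0.0/16", "services": "10.96.0.0/12", "nodes": "192.168.10.0/24"}
    print(find_overlaps(good))  # []
    print(find_overlaps(bad))   # [('pods', 'services')]
```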


Conclusion

To sum up, using IPAM in a Kubernetes cluster brings many advantages, such as improved security, scalability, flexibility, and efficient resource use. IPAM systems support multi-tenancy, fault tolerance, and a variety of deployment environments while keeping IP address assignment and management seamless throughout the cluster. By automating network setup and policy enforcement, Kubernetes IPAM simplifies management and helps businesses build robust, dynamic containerized apps.
