Learn how to configure maxSurge for Kubernetes rolling updates. Our Kubernetes Support team is here to help you with your questions and concerns.

Configuring maxSurge in Kubernetes Rolling Updates

Did you know that the maxSurge and maxUnavailable properties in Kubernetes help control the rolling updates of a deployment?

These properties are specified in the spec.strategy.rollingUpdate section of a Deployment manifest, and they play a key role in keeping the application available during an update.

Kubernetes uses the rolling update as the default strategy for Deployments. In a rolling update, pods running the old version are replaced with pods running the new version a few at a time, so the application can keep serving requests without downtime.

Additionally, during a rolling update, readiness probes verify that each new pod is ready before Kubernetes scales down pods running the old version. If the new pods run into problems, the update can be halted and rolled back without affecting the pods that are still serving the old version.
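Readiness probes are declared per container in the pod template. The fragment below is a minimal sketch, assuming an nginx container that answers HTTP on port 80 at `/`; the image tag, path, port, and timings are illustrative assumptions rather than values from this article.

```yaml
# Fragment of a Deployment's pod template (spec.template.spec); other fields omitted
containers:
- name: nginx
  image: nginx:1.11.5        # hypothetical image tag
  ports:
  - containerPort: 80
  readinessProbe:
    httpGet:
      path: /                # assumed endpoint that returns 200 when the app is ready
      port: 80
    initialDelaySeconds: 5   # wait 5 seconds before the first check
    periodSeconds: 10        # then check every 10 seconds
```

Until this probe succeeds, the pod is not counted as ready, so the rolling update does not treat it as a healthy replacement for an old pod.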

To execute a rolling update, we can simply update the image of our pods using the “kubectl set image” command. This action will automatically initiate a rolling update.

- What is maxSurge?

- Enable Rolling Updates

- Set maxSurge and maxUnavailable

- Rollback Changes

- Pause & Resume Rolling Update

- Advantages of rolling updates

What is maxSurge?

In Kubernetes, maxSurge is a property that defines the maximum number (or percentage) of pods that can be created above the desired replica count during a rolling update. During such updates, Kubernetes replaces old pods with new ones so the application can be deployed without downtime.

In other words, maxSurge caps the temporary increase in the total number of pods: the pod count never exceeds the desired replica count plus maxSurge. This keeps the update controlled and gradual while maintaining a certain level of availability for the application.
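The limits can be written out explicitly. As a worked sketch, using the documented Kubernetes behavior that a percentage maxSurge is rounded up and a percentage maxUnavailable is rounded down (both default to 25%), let $r$ be the desired replica count and $p_s$, $p_u$ the maxSurge and maxUnavailable percentages. During the update:

$$
\text{pods}_{\max} = r + \lceil p_s \cdot r \rceil, \qquad \text{available}_{\min} = r - \lfloor p_u \cdot r \rfloor
$$

With $r = 4$ and the 25% defaults:

$$
\text{pods}_{\max} = 4 + \lceil 0.25 \cdot 4 \rceil = 5, \qquad \text{available}_{\min} = 4 - \lfloor 0.25 \cdot 4 \rfloor = 3
$$

With $r = 10$, $\lceil 2.5 \rceil = 3$ and $\lfloor 2.5 \rfloor = 2$, so up to 13 pods may exist while at least 8 stay available. When absolute numbers are used instead of percentages, they are added or subtracted directly.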

Enable Rolling Updates

Did you know that Kubernetes Deployments act as wrappers around ReplicaSets? ReplicaSets are Kubernetes controllers that keep a specified number of pod replicas running.

Deployments extend ReplicaSets with features such as orchestrated rolling updates, rollout progress and health tracking, and rollbacks.

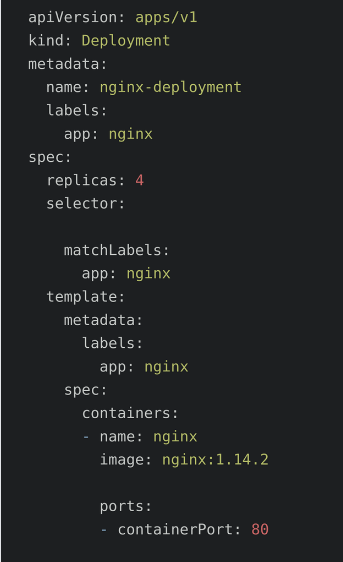

- To begin with, we have to create a yaml file with deployment specifications via a text editor.

For example:
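A minimal sketch of such a file, saved as `nginx-test.yaml`. The Deployment name, the `app: nginx` labels, and the starting image tag are assumptions for illustration; only the four replicas come from this section.

```yaml
# nginx-test.yaml: minimal Deployment sketch (name, labels, and image tag are hypothetical)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-test
spec:
  replicas: 4                # four replicas, as checked with kubectl get rs below
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx           # must match spec.selector.matchLabels
    spec:
      containers:
      - name: nginx
        image: nginx:1.10    # hypothetical starting version
        ports:
        - containerPort: 80
```

Because this manifest sets no `strategy`, Kubernetes applies the default RollingUpdate strategy with maxSurge and maxUnavailable at 25%.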

- Then, save and exit the file.

- Now, it is time to create the deployment via the kubectl create command and the yaml file:

kubectl create -f nginx-test.yaml

Remember to replace nginx-test.yaml with the name of the YAML file we created in the previous step.

- Next, check the deployment with this command:

kubectl get deployment

The output shows whether the deployment is ready.

- Then, we have to check the ReplicaSets with this command:

kubectl get rs

Our sample file specifies four replicas, so the output shows the desired, current, and ready counts for those 4 replicas.

- Now, we can check if the pods are up with this command:

kubectl get pod

Set maxSurge and maxUnavailable Values

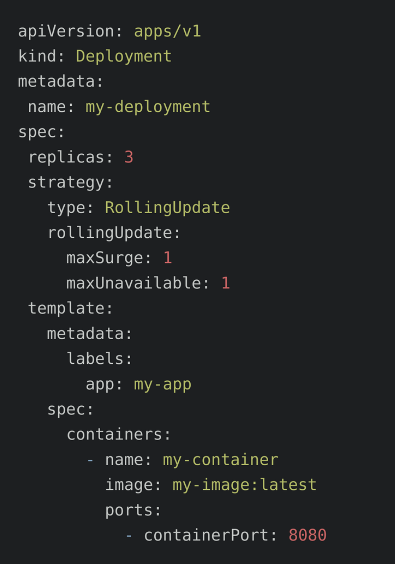

In order to set the maxSurge and maxUnavailable values, we have to edit the spec.strategy.rollingUpdate section of the deployment manifest.

For example:
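A minimal sketch of `rollingupdate.yaml`, assuming the Deployment name `rollingupdate-strategy` (taken from the rollout status command below), four replicas (matching the sample rollout message), and a hypothetical nginx container with `app: web` labels:

```yaml
# rollingupdate.yaml: Deployment sketch with an explicit rolling update strategy
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rollingupdate-strategy
spec:
  replicas: 4
  selector:
    matchLabels:
      app: web               # hypothetical label
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1            # at most 1 pod above the desired 4 (5 in total)
      maxUnavailable: 1      # at most 1 pod below the desired 4 (3 available)
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: web
        image: nginx:1.11.5  # hypothetical image
        ports:
        - containerPort: 80
```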

Here, we are setting both maxSurge and maxUnavailable to 1. In other words, during the rolling update, at most one pod can exist above the desired replica count, and at most one pod can be unavailable at a time.

Then, we can create the deployment with the kubectl command:

$ kubectl apply -f rollingupdate.yaml

Furthermore, we can check the rollout status as seen below:

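$ kubectl rollout status deployment/rollingupdate-strategy

While the rollout is in progress, the command prints progress messages like the one below and keeps watching until the rollout finishes. If the command was interrupted, we can run it again to verify that the rollout succeeded.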

Waiting for rollout to finish: 2 out of 4 new replicas have been updated…

Once the rollout is successful, we can view the Deployment with the kubectl get deployments command.

Then, we can run kubectl get rs to check whether the Deployment's ReplicaSets reflect the update.

As seen above, the kubectl rollout command is very handy, and our experts use it to check how a deployment is doing. By default, kubectl rollout status waits until all of the Pods in the deployment have been updated and are available. If the rollout succeeds, the command exits with return code zero; if the deployment fails, it exits with a non-zero code.
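When a rollout counts as failed is governed by the Deployment's `progressDeadlineSeconds` field, which defaults to 600 seconds. If the rollout makes no progress for that long, Kubernetes marks it as failed and `kubectl rollout status` exits with a non-zero code. A fragment with an illustrative value:

```yaml
# Fragment of a Deployment spec (other fields omitted)
spec:
  progressDeadlineSeconds: 300   # illustrative: report failure after 5 minutes without progress
```

Exceeding the deadline only reports the failure in the Deployment's status; Kubernetes does not roll back on its own.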

If a deployment fails, the rollout stops, but it is not rolled back automatically: the pods from the failed deployment remain.

In other words, after a failed deployment, our environment has pods from both the old and the new versions. We can return to a stable, working state with the rollout undo command, which scales the previous working version back up and removes the pods from the failed rollout.

$ kubectl rollout undo deployment rollingupdate-strategy

Furthermore, Kubernetes relies on readiness probes to check the application. When an application instance responds positively to its readiness probe, the instance is considered ready to receive traffic. If the application keeps failing the probe, Kubernetes never marks the new pod as ready, so the rolling update cannot continue past the limits set by maxSurge and maxUnavailable.

Rollback Changes

If something goes wrong during the app update, we can easily go back to the way it was before. First, list the revisions with this kubectl command:

$ kubectl rollout history deployment/deployment-definition

The output lists the recorded revisions, and we can choose the revision we want:

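$ kubectl rollout undo deployment/deployment-definition --to-revision=2

This undoes the changes and brings our app back to the chosen revision (2 is only an example; use a number from the history output). Without --to-revision, the command returns to the previous revision.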

Pause & Resume Rolling Update

We can easily pause and resume rolling updates with kubectl rollout commands as seen below:

- To halt the update, use:

kubectl rollout pause deployment/deployment-definition

- To resume the update, use:

kubectl rollout resume deployment/deployment-definition

Now, suppose the Deployment is undergoing a rolling update. In this case, one might wonder whether this ensures zero downtime when a Vertical Pod Autoscaler (VPA) recommendation is applied.

According to our experts, the default RollingUpdate behavior for Deployments targets zero downtime. It replaces Pod instances incrementally, bringing up new replicas and removing older ones as the new ones become ready, within the limits set by maxSurge and maxUnavailable.

In fact, we can control the level of unavailability and the number of extra pods created with the `maxUnavailable` and `maxSurge` fields mentioned earlier. Tuning these fields lets us tailor the rolling update process toward zero downtime.
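One common way to do this is to set maxUnavailable to 0 and maxSurge to a positive value, so Kubernetes must bring up a new pod and see it become ready before removing an old one. A minimal fragment, assuming a four-replica Deployment (the values are illustrative):

```yaml
# Fragment of a Deployment spec (selector and template omitted)
spec:
  replicas: 4
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1         # up to 1 extra pod (5 in total) during the update
      maxUnavailable: 0   # never drop below 4 available pods
```

The two fields cannot both be 0, since the rollout could then never make progress. Also, without a readiness probe a pod counts as ready as soon as its containers start, so this setting is only as reliable as the probe behind it.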

Advantages of Rolling Updates

Rolling updates support the gradual integration of changes. This offers flexibility and control throughout the application lifecycle in Kubernetes clusters. Let’s look at some of the key benefits:

- Rolling updates offer continuous availability by keeping pod instances active during upgrades.

- Because only some pods run the new version at first, developers can observe the impact of changes in production while most users are still served by the old version. This helps with testing and validation.

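- Rolling updates need only a small amount of extra capacity (at most maxSurge additional pods at a time) rather than a full duplicate environment. Hence it is a cost-effective deployment strategy compared to methods that run two complete copies of the application.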

- It avoids the need to migrate configuration files manually. Even complex upgrades can be rolled out by making simple changes to a deployment file.

We can carry out a rolling update that changes the container image with these 3 methods:

- set image

```shell
# format
$ kubectl set image deployment <deployment-name> <container-name>=<new-image> --record

# example
$ kubectl set image deployment nginx nginx=nginx:1.11.5 --record
```

- edit

```shell
# format
$ kubectl edit deployment <deployment-name> --record

# example
$ kubectl edit deployment nginx --record
```

- replace

We have to change the container image version in the YAML file.

```yaml
spec:
  containers:
  - name: nginx
    # newer image version
    image: nginx:1.11.5
    imagePullPolicy: IfNotPresent
    ports:
    - containerPort: 80
```

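Here, we use replace instead of apply:

```shell
# format
$ kubectl replace -f <updated-file>.yaml --record

# example
$ kubectl replace -f new-nginx.yaml --record
```

Recent kubectl releases mark `--record` as deprecated. It only stores the command in the `kubernetes.io/change-cause` annotation, which `kubectl rollout history` shows in its CHANGE-CAUSE column, so the update itself works the same without it.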


Conclusion

In brief, our Support Experts demonstrated how to configure maxSurge for Kubernetes rolling updates.
