A Kubernetes Persistent Volume Claim (PVC) is essentially a request for a Persistent Volume that meets certain requirements, which a pod can then mount.

As part of our Server management service, Bobcares responds to all inquiries, no matter how big or small.

Let’s look at the Kubernetes Persistent Volume Claim in greater depth.

Kubernetes Persistent Volume Claim

A persistent volume (PV) in Kubernetes is a cluster resource that gives pods access to persistent storage. A PV is either created manually by an administrator or provisioned dynamically through a Kubernetes StorageClass. Unlike regular volumes, whose lifetime is tied to a pod, PVs are persistent, which allows stateful application use cases to be supported: a PV survives the destruction of the pods that it serves.

Pods request PVs through PVCs (Persistent Volume Claims), which are requests for storage. Instead of naming a specific PV, a PVC describes the storage it needs, such as capacity and access mode, and can name the StorageClass it requires. Administrators can define StorageClasses that describe properties of storage devices such as performance, service levels, and back-end policies.
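As an illustration, an administrator might define a StorageClass like the minimal sketch below. It assumes a cluster running the AWS EBS CSI driver (`ebs.csi.aws.com`). The class name `fast-ssd` is hypothetical. The `parameters` block is interpreted by the provisioner, so its keys differ between storage back ends.

```yaml
# Hypothetical StorageClass; assumes the AWS EBS CSI driver is installed.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast-ssd                          # hypothetical class name
provisioner: ebs.csi.aws.com              # driver that creates volumes for this class
parameters:
  type: gp3                               # provisioner-specific: EBS volume type
reclaimPolicy: Delete                     # remove the backing volume when the claim is deleted
volumeBindingMode: WaitForFirstConsumer   # provision only once a pod uses the claim
allowVolumeExpansion: true                # allow claims of this class to be resized later
```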

The PVC pattern in Kubernetes has the advantage of allowing developers to dynamically request storage resources without knowing how the underlying storage devices are implemented.

Static vs Dynamic Provisioning for Persistent Volume Claims

Binding a pod to a PV requires a PVC and a volume mount in the pod specification. Thanks to these declarations, users can mount PVs in pods without knowing the details of the underlying storage equipment.

There are two ways to provide the PVs that pods mount:

- Static provisioning: Administrators create PVs manually and assign them a StorageClass name. A pod gains access to one of these static PVs when it uses a PVC that requests that StorageClass and whose capacity and access mode requirements the PV satisfies.

- Dynamic provisioning: When no static PV matches the PVC, the Kubernetes cluster creates a new PV on demand, based on the definition of the StorageClass that the PVC requests.
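A minimal sketch of a claim that relies on dynamic provisioning is shown below. It assumes that a StorageClass named `fast-ssd` (hypothetical) exists and has a provisioner configured. The claim name `app-data` is also hypothetical. If no existing PV satisfies the claim, the cluster asks that class's provisioner to create one.

```yaml
# Hypothetical PVC; assumes a StorageClass named "fast-ssd" with a provisioner exists.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: app-data               # hypothetical claim name
spec:
  storageClassName: fast-ssd   # class used to provision a PV if none matches
  accessModes:
    - ReadWriteOnce            # read/write, mountable by a single node at a time
  resources:
    requests:
      storage: 5Gi             # minimum capacity the claim needs
```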

Creating a PVC and Binding to a PV

Here we will look at how PVs and PVCs work. This is a synopsis of the full tutorial available in the Kubernetes documentation.

- Setting up a node

First, create a Kubernetes cluster with a single node and connect the kubectl command-line tool to the control plane.

Then, on the node, make the following directory:

sudo mkdir /mnt/data

Finally, create an index.html file in that directory.

- Creating a persistent volume

First, define the PV in a YAML file.
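A sketch of such a file is shown below, modeled on the `pv-volume.yaml` example from the Kubernetes documentation. The hosted file may differ in detail. It describes a 10 GiB `hostPath` volume backed by the `/mnt/data` directory created on the node. The `manual` StorageClass name is assumed here only so that a PVC can be matched to this PV. `hostPath` volumes are suitable for single-node testing, not production.

```yaml
# Sketch modeled on the Kubernetes tutorial's pv-volume.yaml (values assumed).
apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume       # name queried later with "kubectl get pv"
  labels:
    type: local
spec:
  storageClassName: manual   # a PVC must request the same class to bind
  capacity:
    storage: 10Gi            # total size offered by this PV
  accessModes:
    - ReadWriteOnce          # read/write from a single node
  hostPath:
    path: "/mnt/data"        # directory created on the node in the previous step
```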

To create the PV on the node, use the following command:

kubectl apply -f https://k8s.io/examples/pods/storage/pv-volume.yaml

- Making a PVC and binding it to a PV

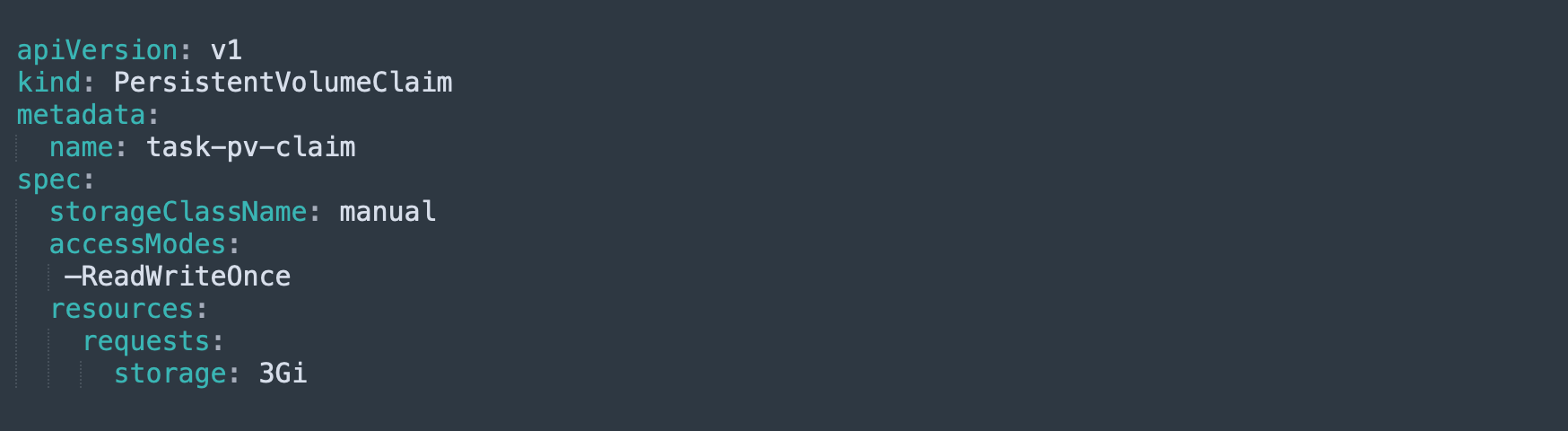

To match the PV we created earlier, create a PVC that requests a PV with the following properties:

* A storage capacity of at least 3 GB.
* Read/write access.

Then define the PVC in a YAML file.
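A minimal sketch of such a claim is shown below, modeled on the `pv-claim.yaml` example from the Kubernetes documentation (the hosted file may differ). It assumes the PV uses the `manual` StorageClass name. The PV can satisfy this claim because the class names match, the access mode is compatible, and the PV's capacity (10 GiB in the PV sketch above) is at least the 3 GiB requested.

```yaml
# Sketch modeled on the Kubernetes tutorial's pv-claim.yaml (values assumed).
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim        # name the pod will reference
spec:
  storageClassName: manual   # must match the PV's storageClassName
  accessModes:
    - ReadWriteOnce          # read/write access
  resources:
    requests:
      storage: 3Gi           # at least 3 GiB of capacity
```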

Then, to apply the PVC, run this command:

kubectl apply -f https://k8s.io/examples/pods/storage/pv-claim.yaml

When we create the claim, the Kubernetes control plane looks for a PV that satisfies its requirements. If it finds one, it binds the PVC to that PV.

Then run the following command to check the status of the PV created earlier:

kubectl get pv task-pv-volume

The STATUS column should now show Bound.

- Creating a pod that uses the persistent volume claim

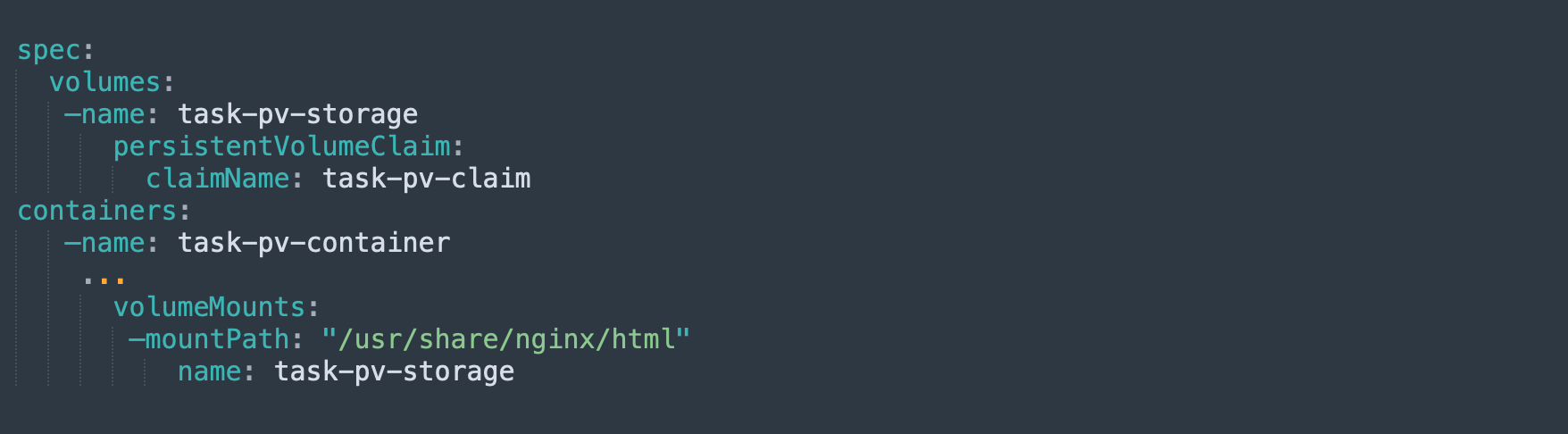

Finally, create a pod that uses the PVC. Run the pod with the NGINX image and reference the PVC we created earlier in the pod's specification.
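A sketch of such a pod is shown below, modeled on the `pv-pod.yaml` example from the Kubernetes documentation (the hosted file may differ). It assumes the claim is named `task-pv-claim`. The claim is mounted at NGINX's default document root, `/usr/share/nginx/html`, so NGINX serves the index.html file stored in the PV.

```yaml
# Sketch modeled on the Kubernetes tutorial's pv-pod.yaml (names assumed).
apiVersion: v1
kind: Pod
metadata:
  name: task-pv-pod
spec:
  volumes:
    - name: task-pv-storage
      persistentVolumeClaim:
        claimName: task-pv-claim          # the PVC created earlier (assumed name)
  containers:
    - name: task-pv-container
      image: nginx
      ports:
        - containerPort: 80
          name: "http-server"
      volumeMounts:
        - mountPath: "/usr/share/nginx/html"  # NGINX serves files from here
          name: task-pv-storage               # refers to the volume defined above
```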

Open a shell in the pod (for example, with kubectl exec and bash), install curl, and then run this command:

curl http://localhost/

The output should display the contents of the index.html file created in step 1. This confirms that the new pod can use the PVC to access data in the PV.

Kubernetes Persistent Volume Claim Errors: Common Causes and Resolution

PVCs can be difficult to work with, and the resulting errors can be hard to diagnose and correct. PVC errors fall into three categories: problems with PV creation, problems with PV provisioning, and changes in PV or PVC specifications.

The most common PVC-related errors are Failed Attach Volume, Failed Mount, and Crash Loop Back Off.

Failed Attach Volume and Failed Mount Errors

Both errors indicate that a pod was unable to mount a PV. Failed Attach Volume occurs when a volume cannot be attached to the new node, typically because it failed to detach from the previous node. Failed Mount occurs when a volume cannot be mounted on the required path.

There are numerous possible causes of these two errors, including failure on the new node, too many disks attached to the new node, a network partitioning error, and failure of storage devices on the previous node.

Diagnosing the problem

To diagnose Failed Attach Volume and Failed Mount errors, run kubectl describe pod for the affected pod and look in the Events section for the error message. The message should also include information about the cause.

Resolving the problem

We’ll have to deal with the Failed Attach Volume and Failed Mount errors manually because Kubernetes can’t handle them automatically:

If Failure to Detach is the cause of the error, manually detach the volume using the storage device’s interface.

Check for a network partitioning issue or an incorrect network path if the error is Failure to Attach or Mount. If that isn't the problem, try getting Kubernetes to schedule the pod on a different node, or investigate and fix the problem on the new node.

Crash Loop Back Off Errors Resulting from the Persistent Volume Claim

Crash Loop Back Off indicates that a pod repeatedly crashes and restarts. In some cases, this error is caused by a corrupted PersistentVolumeClaim.

Diagnosing the problem

To find out whether the crashes are due to a PVC, check the logs of the previous container instance that mounted the PV (for example, with kubectl logs --previous), check the deployment logs, and, if necessary, open a shell in the container to determine why it is crashing.

Resolving the problem

If Crash Loop Back Off is caused by a PVC problem, try the following steps:

- First, to enable debugging, scale the deployment down to 0 replicas.

- Then, get the name of the failed PVC with this query:

kubectl get deployment -o jsonpath="{.spec.template.spec.volumes[*].persistentVolumeClaim.claimName}" failed-deployment

- Next, create a new pod for debugging that mounts this PVC, and use the following command to open a shell in it:

kubectl exec -it volume-debugger -- sh

- Determine which volume is currently mounted in the /data directory and correct the problem that caused the pod to crash.

- Finally, exit the shell, delete the debugging pod, and scale the deployment back up to the required number of replicas.
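A minimal sketch of the volume-debugger pod used in these steps is shown below. It assumes a `busybox` image, which provides `sh`. The claim name `failed-claim` is hypothetical and should be replaced with the name returned by the jsonpath query. Scaling the deployment to 0 first is presumably what frees a ReadWriteOnce volume so that this pod can mount it.

```yaml
# Hypothetical debugging pod; "failed-claim" stands in for the real claim name.
apiVersion: v1
kind: Pod
metadata:
  name: volume-debugger
spec:
  volumes:
    - name: volume-to-debug
      persistentVolumeClaim:
        claimName: failed-claim   # hypothetical: use the name from the jsonpath query
  containers:
    - name: debugger
      image: busybox
      command: ["sleep", "3600"]  # keep the pod running so you can exec into it
      volumeMounts:
        - mountPath: /data        # the PVC's contents appear here
          name: volume-to-debug
```

Once applied with kubectl apply -f, the pod can be opened with the kubectl exec command from the steps above.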


Conclusion

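To sum up, our Support team explained Kubernetes Persistent Volume Claims in detail: how they relate to PVs and StorageClasses, how to create a PVC and bind it to a PV, and how to troubleshoot common PVC errors.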
