Redundancy, scalability, self-healing, and high availability are all benefits of using the Ceph File System with Proxmox. As part of our Proxmox Support Services, we at Bobcares offer solutions to every query that comes our way.

Ceph File System with Proxmox

CephFS implements a POSIX-compliant filesystem that stores its data in a Ceph storage cluster. Because it is built on Ceph, it shares most of Ceph's properties, including redundancy, scalability, self-healing, and high availability. Proxmox VE can also manage Ceph setups, which simplifies configuring CephFS storage. Modern hardware offers plenty of CPU power and RAM, so storage services and VMs can run on the same node without a significant performance impact.

To use the CephFS storage plugin, we must replace the stock Debian Ceph client by adding the Proxmox VE Ceph repository. Once it is added, run apt update followed by apt dist-upgrade to get the latest packages. We must also make sure that no other Ceph repository is configured. Otherwise, the installation fails, or the node ends up with mixed package versions, which leads to unexpected behavior.
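Before running the upgrade, it can help to list every APT source that looks like a Ceph repository. The following Python sketch is our own heuristic, not a Proxmox tool. It assumes one-line `deb` entries in /etc/apt/sources.list and /etc/apt/sources.list.d/*.list and ignores deb822 `.sources` files. It treats any repository URI containing "ceph" as a Ceph repository.

```python
from pathlib import Path


def ceph_repo_lines(text: str) -> list[str]:
    """Return active one-line 'deb' entries whose URI mentions ceph (heuristic)."""
    hits = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if not line.startswith("deb "):
            continue  # skips blank lines, deb-src lines, and anything else
        # The URI is the first field containing "://"; this skips an
        # optional "[signed-by=...]" options block.
        uri = next((f for f in line.split()[1:] if "://" in f), "")
        if "ceph" in uri.lower():
            hits.append(line)
    return hits


def scan_apt_sources(root: Path = Path("/etc/apt")) -> dict[str, list[str]]:
    """Map each one-line sources file under root to its Ceph-looking entries."""
    files = [root / "sources.list", *sorted((root / "sources.list.d").glob("*.list"))]
    found: dict[str, list[str]] = {}
    for path in files:
        if path.is_file():
            hits = ceph_repo_lines(path.read_text())
            if hits:
                found[str(path)] = hits
    return found


if __name__ == "__main__":
    found = scan_apt_sources()
    entries = [(p, line) for p, lines in found.items() for line in lines]
    for path, line in entries:
        print(f"{path}: {line}")
    if len(entries) > 1:
        print("More than one Ceph entry found; check for a conflicting repository.")
```

Run it on the node before apt dist-upgrade. More than one reported entry may point to a second Ceph repository that should be disabled first.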

Setup Properties

Besides the common storage properties nodes, disable, and content, the CephFS backend supports the following specific properties:

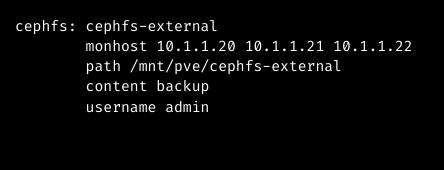

1. monhost: List of monitor daemon addresses. Optional; only needed if Ceph is not running on the Proxmox VE cluster.

2. path: The local mount point. Optional; the default value is /mnt/pve//.

3. username: The Ceph user ID. Optional; only needed if Ceph is not running on the Proxmox VE cluster. Defaults to admin.

4. subdir: The CephFS subdirectory to mount. Optional; defaults to /.

5. fuse: Access CephFS through FUSE instead of the kernel client. Optional; defaults to 0.
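The following minimal Python sketch fills in the defaults above for a storage definition. The function name and the `ceph_on_pve_cluster` flag are hypothetical. The sketch encodes only the rules listed here: subdir defaults to /, fuse to 0, and username to admin, and monhost is required for an external cluster. It leaves path unset so the plugin's own default mount point applies.

```python
# Defaults taken from the property list above; path is deliberately absent.
CEPHFS_DEFAULTS = {"subdir": "/", "fuse": 0, "username": "admin"}


def resolve_cephfs_options(options: dict, ceph_on_pve_cluster: bool) -> dict:
    """Fill in the listed defaults and check the monhost/fuse rules (hypothetical helper)."""
    resolved = {**CEPHFS_DEFAULTS, **options}
    if not ceph_on_pve_cluster and not resolved.get("monhost"):
        # monhost can only be omitted when Ceph runs on the Proxmox VE cluster itself.
        raise ValueError("monhost is required for an external Ceph cluster")
    if resolved["fuse"] not in (0, 1):
        raise ValueError("fuse must be 0 (kernel client) or 1 (FUSE)")
    return resolved


if __name__ == "__main__":
    print(resolve_cephfs_options({"monhost": "10.1.1.20 10.1.1.21"}, ceph_on_pve_cluster=False))
```

Explicit values always win over the defaults because `options` is merged last.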

An Example

An external Ceph cluster is configured in /etc/pve/storage.cfg as a cephfs entry that sets the properties above.
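The layout of such an entry can be sketched in Python. This sketch assumes the usual storage.cfg shape: a `cephfs: <storage-id>` header followed by tab-indented `key value` lines. The storage ID, monitor addresses, content type, and username in the example are placeholders.

```python
def render_cephfs_entry(storage_id: str, props: dict) -> str:
    """Return a storage.cfg section for a cephfs storage (assumed layout)."""
    lines = [f"cephfs: {storage_id}"]
    for key, value in props.items():
        if isinstance(value, (list, tuple)):
            value = " ".join(value)  # e.g. several monitor addresses on one line
        lines.append(f"\t{key} {value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder values for an external cluster.
    print(render_cephfs_entry("cephfs-external", {
        "monhost": ["10.1.1.20", "10.1.1.21", "10.1.1.22"],
        "content": "backup",
        "username": "admin",
    }), end="")
```

Running the script prints a `cephfs: cephfs-external` header followed by one indented line each for monhost, content, and username.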

Authentication

If Ceph is installed locally on the Proxmox VE cluster, the steps below happen automatically when the storage is added. For an external cluster that uses cephx authentication, which is enabled by default, we must supply the secret from that cluster.

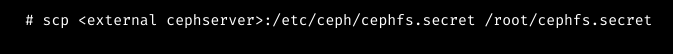

To configure the storage through the CLI, we must first make the file containing the secret available. One method is to copy the file directly from the external Ceph cluster to a Proxmox VE node, for example into the /root directory of the node on which we run the command.

Then, configure the external CephFS storage with the pvesm CLI tool, using the --keyring parameter to point to the secret file we copied.
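The two CLI steps above can be expressed as Python argument lists. This avoids shell-quoting mistakes around the space-separated monitor list. This is a sketch, and nothing is executed. The host name, the remote secret path (/etc/ceph/cephfs.secret), the storage ID, the addresses, and the content type are all placeholders to adapt.

```python
import shlex


def copy_secret_cmd(remote_host: str, remote_path: str,
                    local_path: str = "/root/cephfs.secret") -> list[str]:
    """scp the secret file from an external Ceph node to this Proxmox VE node."""
    return ["scp", f"{remote_host}:{remote_path}", local_path]


def pvesm_add_cephfs_cmd(storage_id: str, monhosts: list[str], keyring: str,
                         content: str = "backup") -> list[str]:
    """pvesm call that registers an external CephFS storage with the copied secret."""
    return [
        "pvesm", "add", "cephfs", storage_id,
        "--monhost", " ".join(monhosts),  # one argument holding all monitors
        "--content", content,
        "--keyring", keyring,             # path to the secret file copied above
    ]


if __name__ == "__main__":
    secret = "/root/cephfs.secret"
    # Hypothetical host and remote path on the external cluster.
    print(shlex.join(copy_secret_cmd("ceph-node1", "/etc/ceph/cephfs.secret", secret)))
    print(shlex.join(pvesm_add_cephfs_cmd("cephfs-external", ["10.1.1.20", "10.1.1.21"], secret)))
```

To actually run a step, pass the list to `subprocess.run(cmd, check=True)` on the Proxmox VE node. `shlex.join` is only used here to display the commands as they would be typed.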

When configuring an external CephFS storage through the GUI, we can copy and paste the secret into the appropriate field. Unlike the rbd backend, whose keyring also contains a [client.userid] section, the secret here is only the key itself. The secret is stored at:

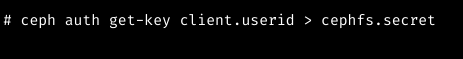
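The CephFS secret is only the bare key, so a keyring copied from an rbd setup needs its [client.userid] header stripped. The Python sketch below assumes the common keyring layout with a `key = ...` line. The helper names and the sample key are made up for illustration, and the base64 check is only a sanity heuristic.

```python
import base64


def keyring_to_secret(text: str) -> str:
    """Return the bare key from keyring text; text that is already a bare key passes through."""
    for raw in text.splitlines():
        name, sep, value = raw.strip().partition("=")
        # Only the first "=" splits, so base64 padding stays in value.
        if sep and name.strip() == "key":
            return value.strip()
    bare = text.strip()
    if bare.startswith("["):
        raise ValueError("keyring section found but no 'key =' line")
    return bare


def looks_like_ceph_key(secret: str) -> bool:
    """Sanity heuristic: a non-empty string that decodes as strict base64."""
    if not secret:
        return False
    try:
        base64.b64decode(secret, validate=True)
    except ValueError:  # binascii.Error is a subclass of ValueError
        return False
    return True


if __name__ == "__main__":
    sample = "[client.userid]\n\tkey = AQBzdGVzdGtleQ==\n"  # made-up key
    secret = keyring_to_secret(sample)
    print(secret, looks_like_ceph_key(secret))
```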

A Ceph administrator can also request the secret from the Ceph cluster for a given userid, where userid is the client ID that has been set up to access the cluster.
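Here is a minimal Python sketch of that request. It assumes the standard `ceph auth get-key client.<userid>` command, run on a node with the ceph CLI and admin credentials. The helper names are hypothetical. The injectable `runner` argument exists only so the logic can be tested without a cluster. The key is written to a file that only its owner can read.

```python
import os
import subprocess
from pathlib import Path


def get_key_cmd(userid: str) -> list[str]:
    """Argument list for `ceph auth get-key client.<userid>`."""
    return ["ceph", "auth", "get-key", f"client.{userid}"]


def fetch_secret(userid: str, out_path: str, runner=subprocess.run) -> Path:
    """Fetch the bare key for client.<userid> and save it with owner-only permissions."""
    # check=True raises CalledProcessError if the ceph command fails.
    result = runner(get_key_cmd(userid), capture_output=True, text=True, check=True)
    key = result.stdout.strip()
    if not key:
        raise RuntimeError(f"empty key returned for client.{userid}")
    path = Path(out_path)
    # Mode 0o600 applies when the file is created; an existing file keeps its mode.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as fh:
        fh.write(key)  # bare key only, no [client.userid] section
    return path
```

For example, `fetch_secret("userid", "cephfs.secret")` on an admin node produces a file. That file can then be copied to the Proxmox VE node as described above.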


Conclusion

To sum up, our Support team walked through setting up the Ceph File System as storage in Proxmox VE, which brings redundancy, scalability, self-healing, and high availability.
