Learn how to fix Kubernetes Back-off Restarting Failed Container error. Our Kubernetes Support team is here to help you with your questions and concerns.

How to Fix Kubernetes Back-off Restarting Failed Container Error

Did you know that the ‘back-off restarting failed container’ error is a common issue in Kubernetes?

It indicates that a pod is stuck in a restart loop. This error occurs when a container in the pod fails to start correctly or keeps crashing. By default, a pod’s restart policy (`restartPolicy`) is set to “Always,” meaning Kubernetes restarts the container whenever it exits.

If the pod keeps failing, Kubernetes applies a back-off delay between each restart attempt, with the delay increasing exponentially (10s, 20s, 40s, etc.) and capping at five minutes.

During this period, the error message ‘back-off restarting failed container’ is displayed.
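To make the back-off schedule concrete, here is a minimal sketch that reproduces the delays described above. It assumes a 10-second base that doubles on every attempt and a 300-second (five-minute) cap. The function name `backoff_delay` is our own and is not part of Kubernetes.

```shell
# backoff_delay N: print the delay in seconds before restart attempt N (N >= 1).
# Assumption: 10s base, doubling per attempt, capped at 300s (five minutes).
backoff_delay() {
  attempt=$1
  delay=10
  i=1
  # Double the delay once per earlier attempt, stopping once the cap is reached.
  while [ "$i" -lt "$attempt" ] && [ "$delay" -lt 300 ]; do
    delay=$((delay * 2))
    i=$((i + 1))
  done
  if [ "$delay" -gt 300 ]; then
    delay=300
  fi
  echo "$delay"
}

# Print the delays for the first seven restart attempts.
for n in 1 2 3 4 5 6 7; do
  printf 'attempt %d: %ss\n' "$n" "$(backoff_delay "$n")"
done
```

Running the loop prints 10s, 20s, 40s, 80s, and 160s for the first five attempts, and 300s from the sixth attempt onward.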

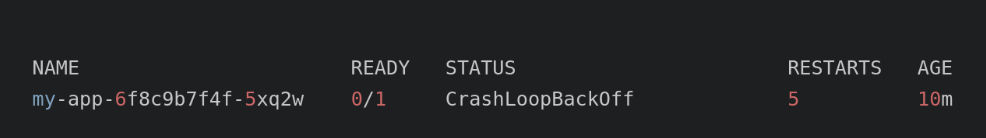

We can use the `kubectl get pods` command to check the pod state. In the output, an affected pod typically shows a `STATUS` of `CrashLoopBackOff` and a growing `RESTARTS` count. For example, a `RESTARTS` value of 5 for the pod `my-app-6f8c9b7f4f-5xq2w` indicates that it has crashed five times and is waiting for the next restart attempt.
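When a namespace holds many pods, it can help to filter the listing down to the ones that are crash looping. The sketch below is one way to do that. The helper name `crashlooping_pods` is hypothetical, and it assumes the default column order of `kubectl get pods` (NAME, READY, STATUS, RESTARTS, AGE).

```shell
# crashlooping_pods: read `kubectl get pods --no-headers` output on stdin and
# print the name and restart count of every pod whose STATUS is CrashLoopBackOff.
# Assumption: default columns NAME READY STATUS RESTARTS AGE.
crashlooping_pods() {
  awk '$3 == "CrashLoopBackOff" { print $1, $4 }'
}

# Usage (requires access to a cluster):
#   kubectl get pods --no-headers | crashlooping_pods
```

Reading from standard input keeps the filter independent of the cluster, so it can also be applied to saved output.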


Common Causes of the ‘Back-off Restarting Failed Container’ Error

Several factors can cause a pod to fail to start and enter a crash loop. Here are some common causes:

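- The pod may crash due to resource shortages, most often memory. A container that exceeds its memory limit is killed (`OOMKilled`), while CPU limits throttle the container, which can make startup or health checks time out. This can result from memory leaks in the application, misconfigured resource requests and limits, or simply because the application needs more resources than are available on the node.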

- Issues in the deployment configuration, like incorrect image names, wrong environment variables, missing secrets, or invalid commands, can cause the pod to crash.

- If the pod relies on external services that are experiencing problems, it might crash. For instance, the pod might fail to resolve a hostname, connect to a database, or authenticate with an API.

- The pod may need certain dependencies to run properly. If these are missing, the pod might crash. For example, the pod might fail to load a module, execute a script, or run a command.

- Recent updates to the application code, container image, or Kubernetes cluster might introduce bugs, incompatibilities, or breaking changes. This can cause the pod to crash due to issues such as failing to parse a configuration file, handle an exception, or communicate with another pod.
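Many of the causes above leave a trace in the exit code of the crashed container. The sketch below maps a few common exit codes to likely explanations. The helper name `explain_exit_code` is our own, and the hints follow general shell and signal conventions (128 plus the signal number) rather than anything specific to Kubernetes, so treat them as starting points only.

```shell
# explain_exit_code CODE: print a hint for a container exit code.
# The hints are conventions, not guarantees; always confirm with the logs.
explain_exit_code() {
  case "$1" in
    0)   echo "exited cleanly; the main process may simply have finished" ;;
    1)   echo "application error; check the logs" ;;
    126) echo "command found but not executable" ;;
    127) echo "command not found; check the image and command" ;;
    137) echo "killed by SIGKILL; often an out-of-memory kill" ;;
    139) echo "segmentation fault (SIGSEGV)" ;;
    143) echo "terminated by SIGTERM" ;;
    *)   echo "no hint for this code; check the logs" ;;
  esac
}

# Example: explain_exit_code 137
```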

Troubleshooting Steps

Here are some steps to troubleshoot the ‘back-off restarting failed container’ error:

- We can use `kubectl logs pod-name` to examine the container’s logs for error messages that point to the root cause. Because the container keeps restarting, adding `--previous` shows the logs of the last crashed instance.

- We can use `kubectl describe pod pod-name` to get detailed information about the pod’s status, events, and conditions. The `Last State`, `Reason`, and `Exit Code` fields and the `Events` section often show why the container failed.

- Ensure the pod’s resource requests and limits are appropriate for the workload, and adjust them if necessary.

- Verify the integrity and content of the container image. If the image is faulty, we can rebuild it or use a different image.

- Review the pod’s YAML file for errors or inconsistencies, paying attention to environment variables, commands, arguments, and volumes.

- Make sure all required dependencies are available and accessible, including network connectivity and DNS resolution.

- Adjust the liveness probe’s `initialDelaySeconds`, `periodSeconds`, and `failureThreshold` to prevent premature restarts. Then, verify the probe’s logic and ensure it correctly indicates the container’s health.

- Check the status of external services and their configurations, and ensure that the pod can communicate and authenticate with them.
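The first two steps can be combined into a small helper that gathers the most useful data in one pass. This is a sketch, not a definitive tool. The function name `diagnose_pod` is hypothetical, and it assumes the failing container is the first one in the pod and that the namespace defaults to `default`.

```shell
# diagnose_pod POD [NAMESPACE]: collect basic crash-loop diagnostics.
# Assumptions: the failing container is the first one in the pod, and the
# namespace is "default" unless a second argument is given.
diagnose_pod() {
  pod=$1
  ns=${2:-default}

  echo "== Last termination (reason and exit code) =="
  kubectl get pod "$pod" -n "$ns" \
    -o jsonpath='{.status.containerStatuses[0].lastState.terminated.reason}{" "}{.status.containerStatuses[0].lastState.terminated.exitCode}{"\n"}'

  echo "== Logs from the previous (crashed) container =="
  kubectl logs "$pod" -n "$ns" --previous --tail=50

  echo "== Pod description and events =="
  kubectl describe pod "$pod" -n "$ns"
}

# Usage (requires access to a cluster):
#   diagnose_pod my-app-6f8c9b7f4f-5xq2w
```

The `--previous` flag matters here because the current container instance may have just started and not logged the failure yet.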

With these troubleshooting steps, we can identify and resolve the root cause of the ‘back-off restarting failed container’ error.
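If the diagnosis points to a memory limit that is too low or a liveness probe that fires too early, the fix might look like the sketch below. Both helper names are hypothetical. The sketch assumes the workload is a Deployment, that the container to change is the first one in the pod template, and that a liveness probe is already defined. The values to use depend entirely on the application.

```shell
# raise_memory DEPLOYMENT REQUEST LIMIT: set the memory request and limit
# on all containers of a Deployment (for example 256Mi and 512Mi).
raise_memory() {
  kubectl set resources deployment "$1" --requests="memory=$2" --limits="memory=$3"
}

# delay_liveness_probe DEPLOYMENT SECONDS: set initialDelaySeconds on the
# first container's liveness probe. Assumes the probe is already defined.
delay_liveness_probe() {
  kubectl patch deployment "$1" --type=json \
    -p "[{\"op\":\"add\",\"path\":\"/spec/template/spec/containers/0/livenessProbe/initialDelaySeconds\",\"value\":$2}]"
}

# Usage (requires access to a cluster):
#   raise_memory my-app 256Mi 512Mi
#   delay_liveness_probe my-app 30
```

Both commands change the pod template, so the Deployment rolls out new pods. It is worth watching the new pods with `kubectl get pods` afterwards to confirm that the restarts have stopped.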


Conclusion

In brief, our Support Experts demonstrated how to fix the Kubernetes Back-off Restarting Failed Container error.
