Stuck with the “Package org.apache.hadoop.io Does Not Exist” Error? Our Apache Support team is here to help you with your questions and concerns.

How to Fix “Package org.apache.hadoop.io Does Not Exist” Error

Is your Hadoop program throwing a tantrum with the “package org.apache.hadoop.io does not exist” error?

Fear not, our experts are here to help.

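This error usually points to a problem with dependencies, the classpath, the API in use, or the configuration. In every case, `javac` cannot find the classes of the `org.apache.hadoop.io` package, which ship in the `hadoop-common` JAR. Today, we are going to explore the common causes of this error and how to fix them.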

- Missing Hadoop Dependencies

- Incorrect Classpath

- Old Hadoop API

- Missing Maven Dependencies

- Incorrect Hadoop Version

- Missing Hadoop Configuration

- Missing Hadoop JARs

Missing Hadoop Dependencies

In this scenario, the project does not include the necessary Hadoop libraries.

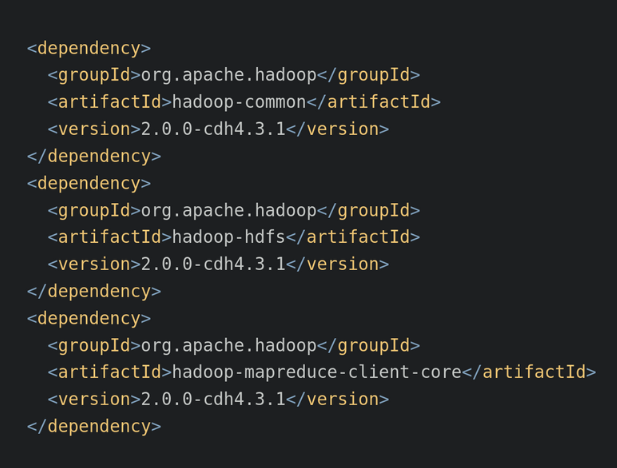

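We can resolve the issue by making sure the build configuration includes the required Hadoop dependencies. If we are using Maven, declare them in the `<dependencies>` section of the `pom.xml` file. The `org.apache.hadoop.io` package ships in `org.apache.hadoop:hadoop-common`, and the `org.apache.hadoop:hadoop-client` artifact pulls it in along with the other client libraries. Use a version that matches the installed Hadoop.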
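To confirm that the dependency actually reached the classpath, a small check can help. This is a minimal sketch, not part of Hadoop: the class name `HadoopClassCheck` is our own, it assumes Java 11 or later, and it simply tries to load a few representative Hadoop classes by name and prints the JAR each one came from.

```java
import java.security.CodeSource;
import java.util.List;
import java.util.Optional;

// Hypothetical diagnostic (not a Hadoop tool): can key Hadoop classes be loaded, and from where?
class HadoopClassCheck {

    // Representative classes for the packages discussed in this article.
    static final List<String> CLASSES = List.of(
            "org.apache.hadoop.io.Text",             // shipped in hadoop-common
            "org.apache.hadoop.conf.Configuration",  // shipped in hadoop-common
            "org.apache.hadoop.mapreduce.Job");      // shipped in hadoop-mapreduce-client-core

    /** Returns where the class was loaded from, or empty if it is not on the classpath. */
    static Optional<String> locate(String className) {
        try {
            // initialize=false: we only want to know whether the class can be found.
            Class<?> cls = Class.forName(className, false, HadoopClassCheck.class.getClassLoader());
            CodeSource source = cls.getProtectionDomain().getCodeSource();
            // Bootstrap (core JDK) classes have no code source.
            if (source == null || source.getLocation() == null) {
                return Optional.of("(bootstrap)");
            }
            return Optional.of(source.getLocation().toString());
        } catch (ClassNotFoundException | LinkageError e) {
            return Optional.empty();
        }
    }

    public static void main(String[] args) {
        for (String name : CLASSES) {
            String where = locate(name).orElse("NOT FOUND (add the Hadoop dependency or JAR)");
            System.out.println(name + " -> " + where);
        }
    }
}
```

Run it with the same classpath you use for the Hadoop code, for example `javac HadoopClassCheck.java && java -cp "$(hadoop classpath):." HadoopClassCheck`. A `NOT FOUND` line for `org.apache.hadoop.io.Text` means `hadoop-common` is missing from that classpath.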

Incorrect Classpath

Another cause is an incorrect classpath: the Java compiler (`javac`) cannot find the Hadoop classes because the Hadoop JARs are not on its classpath.
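When the classpath is suspect, it helps to see what it actually expands to. The sketch below (hypothetical class `ClasspathInspector`, Java 11+) splits a classpath string, expands `dir/*` wildcard entries into the `.jar`/`.JAR` files of that directory the way the `java` launcher does, flags entries that do not exist on disk, and checks whether a `hadoop-common` JAR is among them. Output of `hadoop classpath` typically contains such wildcard entries.

```java
import java.io.File;
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: expand and sanity-check a classpath string.
class ClasspathInspector {

    /** Expands "dir/*" to the JAR files in dir; other entries are returned unchanged. */
    static List<Path> expand(String classpath) {
        List<Path> result = new ArrayList<>();
        for (String entry : classpath.split(File.pathSeparator)) {
            if (entry.isEmpty()) {
                continue; // this sketch skips empty segments, e.g. from a trailing separator
            }
            if (entry.endsWith("/*") || entry.endsWith(File.separator + "*")) {
                Path dir = Paths.get(entry.substring(0, entry.length() - 2));
                if (!Files.isDirectory(dir)) {
                    result.add(dir); // keep it so it gets reported as missing
                    continue;
                }
                try (DirectoryStream<Path> jars = Files.newDirectoryStream(dir, "*.{jar,JAR}")) {
                    jars.forEach(result::add);
                } catch (IOException e) {
                    result.add(dir);
                }
            } else {
                result.add(Paths.get(entry));
            }
        }
        return result;
    }

    /** True if any entry's file name looks like the hadoop-common JAR. */
    static boolean hasHadoopCommon(List<Path> entries) {
        return entries.stream()
                .map(p -> p.getFileName() == null ? "" : p.getFileName().toString())
                .anyMatch(name -> name.startsWith("hadoop-common") && name.endsWith(".jar"));
    }

    public static void main(String[] args) {
        // Pass a classpath as the first argument, or fall back to this JVM's own classpath.
        String cp = args.length > 0 ? args[0] : System.getProperty("java.class.path");
        List<Path> entries = expand(cp);
        for (Path p : entries) {
            System.out.println((Files.exists(p) ? "ok       " : "MISSING  ") + p);
        }
        System.out.println("hadoop-common JAR present: " + hasHadoopCommon(entries));
    }
}
```

For example: `java ClasspathInspector "$(hadoop classpath)"`.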

This can be fixed by setting the Hadoop classpath correctly. The `hadoop classpath` command prints the classpath of the installed Hadoop, so use it to set `CLASSPATH` (plus the current directory) before compiling:

`export CLASSPATH="$(hadoop classpath):."`

Old Hadoop API

If the code uses the old Hadoop API (`org.apache.hadoop.mapred`), we may run into the error.

We can resolve this by updating the code to use the new Hadoop API (`org.apache.hadoop.mapreduce`) as follows:

- First, look for references to `org.apache.hadoop.mapred` in the code.

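- Then, replace the `org.apache.hadoop.mapred` classes and methods with their `org.apache.hadoop.mapreduce` counterparts. This is not a plain find-and-replace, because the APIs differ: for example, `JobConf` gives way to `Job` and `Configuration`, and a mapper's `map` method receives a `Context` instead of an `OutputCollector` and a `Reporter`.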

- Next, set the `mapreduce.framework.name` property (usually in `mapred-site.xml`) to `local` or `yarn`, so the job runs on the intended execution framework.

- Finally, run the updated code to verify it works with the new API.
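The first step above can be automated with a short scan. This is a minimal sketch assuming UTF-8 `.java` sources under one directory (Java 11+); `OldApiScanner` is a hypothetical name. It only lists references, so the migration itself still has to be done class by class.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper: find source lines that still reference the old mapred API.
class OldApiScanner {

    // The trailing dot keeps "org.apache.hadoop.mapreduce." from matching.
    static final String OLD_API = "org.apache.hadoop.mapred.";

    /** Returns "file:line: text" for every line of a .java file under root that mentions the old API. */
    static List<String> scan(Path root) {
        List<String> hits = new ArrayList<>();
        try {
            List<Path> javaFiles;
            try (Stream<Path> files = Files.walk(root)) {
                javaFiles = files.filter(p -> p.toString().endsWith(".java"))
                        .sorted()
                        .collect(Collectors.toList());
            }
            for (Path file : javaFiles) {
                List<String> lines = Files.readAllLines(file); // reads as UTF-8
                for (int i = 0; i < lines.size(); i++) {
                    if (lines.get(i).contains(OLD_API)) {
                        hits.add(file + ":" + (i + 1) + ": " + lines.get(i).trim());
                    }
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return hits;
    }

    public static void main(String[] args) {
        // Defaults to Maven's usual source folder; pass another directory as the first argument.
        Path root = Paths.get(args.length > 0 ? args[0] : "src/main/java");
        List<String> hits = scan(root);
        hits.forEach(System.out::println);
        System.out.println(hits.size() + " reference(s) to " + OLD_API + "*");
    }
}
```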

Missing Maven Dependencies

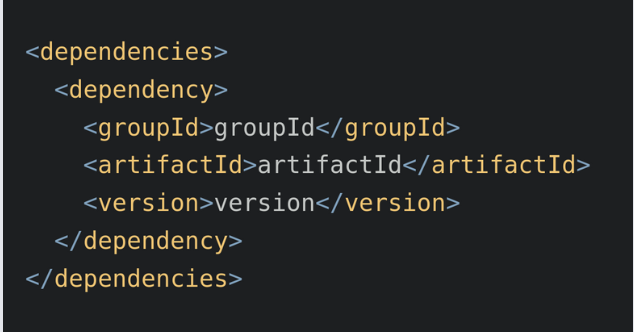

Another likely cause is that the Maven project is missing the necessary dependencies.

We can resolve this by including the needed dependencies in the `pom.xml` file.

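Also, make sure the project structure is correct, with `pom.xml` in the project's root directory. Each dependency is a `<dependency>` element with a `groupId`, `artifactId`, and `version` inside the `<dependencies>` section.

Then rebuild the project with `mvn clean install`, and verify that the dependencies were resolved, for example with `mvn dependency:tree`.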
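To check the `pom.xml` side quickly, the sketch below (hypothetical `PomDependencyCheck`, Java 11+) parses the POM with the JDK's built-in DOM parser and lists every dependency whose `groupId` is `org.apache.hadoop`. It is deliberately simple: it also picks up entries from `<dependencyManagement>` and plugin dependencies, and it prints `${...}` property versions unresolved, so `mvn dependency:tree` remains the authoritative view.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;

// Hypothetical helper: which org.apache.hadoop artifacts does this pom.xml declare?
class PomDependencyCheck {

    /** Returns "artifactId:version" for every <dependency> whose groupId is org.apache.hadoop. */
    static List<String> hadoopDependencies(File pom) throws Exception {
        DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
        factory.setNamespaceAware(false); // match plain tag names, ignoring the POM namespace
        Document doc = factory.newDocumentBuilder().parse(pom);
        List<String> found = new ArrayList<>();
        NodeList deps = doc.getElementsByTagName("dependency");
        for (int i = 0; i < deps.getLength(); i++) {
            Element dep = (Element) deps.item(i);
            if ("org.apache.hadoop".equals(childText(dep, "groupId"))) {
                String version = childText(dep, "version");
                // No <version> here usually means it is inherited or managed elsewhere.
                found.add(childText(dep, "artifactId") + ":" + (version == null ? "(no version here)" : version));
            }
        }
        return found;
    }

    /** Text of the first direct child element with the given name, or null if absent. */
    private static String childText(Element parent, String name) {
        for (Node n = parent.getFirstChild(); n != null; n = n.getNextSibling()) {
            if (n instanceof Element && name.equals(n.getNodeName())) {
                return n.getTextContent().trim();
            }
        }
        return null;
    }

    public static void main(String[] args) throws Exception {
        List<String> deps = hadoopDependencies(new File(args.length > 0 ? args[0] : "pom.xml"));
        if (deps.isEmpty()) {
            System.out.println("No org.apache.hadoop dependencies declared");
        } else {
            deps.forEach(System.out::println);
        }
    }
}
```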

Incorrect Hadoop Version

If the code is written for a different Hadoop version than the one installed, we may run into the error, since classes and packages can differ between versions.

So, make sure the code is compatible with the installed Hadoop version.

- First, run `hadoop version` to see the installed version.

- Then, adjust the code to match the installed Hadoop version, which may involve updating dependencies or configurations.

- Next, test the code to ensure it runs without errors.
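The version comparison can be sketched as follows. Assumptions: the `hadoop version` output is piped in on standard input and its first line has the form `Hadoop 3.3.6`, and matching major.minor versions counts as "close enough", which is our rule of thumb rather than an official compatibility policy. The classpath side calls `org.apache.hadoop.util.VersionInfo.getVersion()` from `hadoop-common` through reflection so the sketch compiles without Hadoop; `HadoopVersionCheck` is a hypothetical name.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: installed Hadoop version vs. the Hadoop version on the classpath.
class HadoopVersionCheck {

    // Assumed format of the first line of `hadoop version`, e.g. "Hadoop 3.3.6".
    private static final Pattern HADOOP_LINE = Pattern.compile("^Hadoop (\\S+)", Pattern.MULTILINE);

    /** Version of hadoop-common on the classpath, or empty if it is not there. */
    static Optional<String> classpathVersion() {
        try {
            Class<?> info = Class.forName("org.apache.hadoop.util.VersionInfo");
            return Optional.of((String) info.getMethod("getVersion").invoke(null));
        } catch (ReflectiveOperationException | LinkageError e) {
            return Optional.empty();
        }
    }

    /** Extracts the version number from `hadoop version` output. */
    static Optional<String> parseCliOutput(String output) {
        Matcher m = HADOOP_LINE.matcher(output);
        return m.find() ? Optional.of(m.group(1)) : Optional.empty();
    }

    /** True when both versions share the same major.minor prefix, e.g. 3.3.x. */
    static boolean sameMajorMinor(String a, String b) {
        String[] x = a.split("\\.");
        String[] y = b.split("\\.");
        return x.length >= 2 && y.length >= 2 && x[0].equals(y[0]) && x[1].equals(y[1]);
    }

    public static void main(String[] args) throws IOException {
        String cliOutput = new String(System.in.readAllBytes(), StandardCharsets.UTF_8);
        Optional<String> installed = parseCliOutput(cliOutput);
        Optional<String> onClasspath = classpathVersion();
        System.out.println("Installed Hadoop:    " + installed.orElse("unknown"));
        System.out.println("Hadoop on classpath: " + onClasspath.orElse("not on classpath"));
        if (installed.isPresent() && onClasspath.isPresent()) {
            System.out.println("Same major.minor:    " + sameMajorMinor(installed.get(), onClasspath.get()));
        }
    }
}
```

Run it with the project's own dependency classpath (for example, the one printed by `mvn dependency:build-classpath`), not with `hadoop classpath`, otherwise it just compares the installation with itself: `hadoop version | java -cp "<project classpath>:." HadoopVersionCheck`.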

Missing Hadoop Configuration

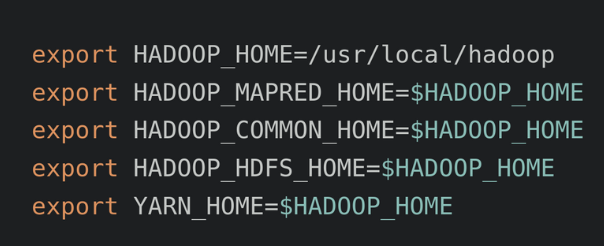

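If the Hadoop environment is not configured properly, we may run into the error as well.

So, set the Hadoop configuration correctly: make sure `HADOOP_HOME` points to the Hadoop installation, `HADOOP_CONF_DIR` points to its configuration directory (by default `$HADOOP_HOME/etc/hadoop`), and `$HADOOP_HOME/bin` is on the `PATH` so the `hadoop` command is available.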
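Because the right values depend on the installation, a read-only check can help before changing anything. The sketch below assumes the standard Hadoop 2.x/3.x layout, where the configuration lives in `$HADOOP_HOME/etc/hadoop` unless `HADOOP_CONF_DIR` says otherwise, and treats a missing `core-site.xml` as a warning sign; `HadoopEnvCheck` is a hypothetical name.

```java
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical helper: sanity-check the usual Hadoop environment variables.
class HadoopEnvCheck {

    /** Returns the problems found; an empty list means the basic setup looks fine. */
    static List<String> check(Map<String, String> env) {
        List<String> problems = new ArrayList<>();
        String home = env.get("HADOOP_HOME");
        if (home == null || !Files.isDirectory(Paths.get(home))) {
            problems.add("HADOOP_HOME is unset or not a directory: " + home);
        }
        String conf = env.get("HADOOP_CONF_DIR");
        if (conf == null && home != null) {
            conf = Paths.get(home, "etc", "hadoop").toString(); // assumed default location
        }
        if (conf == null || !Files.isRegularFile(Paths.get(conf, "core-site.xml"))) {
            problems.add("core-site.xml not found in configuration directory: " + conf);
        }
        return problems;
    }

    public static void main(String[] args) {
        List<String> problems = check(System.getenv());
        if (problems.isEmpty()) {
            System.out.println("Hadoop environment looks OK");
        }
        problems.forEach(p -> System.out.println("Problem: " + p));
    }
}
```

Passing the environment in as a `Map` keeps the check easy to test with a fake environment.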

Missing Hadoop JARs

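If the necessary Hadoop JARs are not included in the project’s classpath, the compiler reports this error.

Hence, make sure that all required Hadoop JARs are present in the classpath. For `org.apache.hadoop.io`, that means the `hadoop-common` JAR, which a standard Hadoop 2.x/3.x installation keeps under `$HADOOP_HOME/share/hadoop/common`.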
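To find out which JAR should be on the classpath, the sketch below (hypothetical `JarPackageFinder`) walks a directory tree and lists the JARs that contain entries for `org.apache.hadoop.io` or its subpackages. Defaulting to `$HADOOP_HOME` is an assumption about where the installation lives; pass another directory as the first argument if needed.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.jar.JarFile;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper: which JARs under a directory contain a given package?
class JarPackageFinder {

    /** True if the JAR has at least one entry under packagePath, e.g. "org/apache/hadoop/io/". */
    static boolean containsPackage(Path jar, String packagePath) {
        try (JarFile jf = new JarFile(jar.toFile())) {
            return jf.stream().anyMatch(e -> e.getName().startsWith(packagePath));
        } catch (IOException e) {
            return false; // unreadable or corrupt JAR: treat as not containing the package
        }
    }

    /** All JARs under root that contain the package (given in dotted form), sorted by path. */
    static List<Path> find(Path root, String packageName) {
        String packagePath = packageName.replace('.', '/') + "/";
        try (Stream<Path> files = Files.walk(root)) {
            return files.filter(p -> p.toString().endsWith(".jar"))
                    .filter(p -> containsPackage(p, packagePath))
                    .sorted()
                    .collect(Collectors.toList());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path root = Paths.get(args.length > 0 ? args[0] : System.getenv().getOrDefault("HADOOP_HOME", "."));
        List<Path> jars = find(root, "org.apache.hadoop.io");
        if (jars.isEmpty()) {
            System.out.println("No JAR under " + root + " contains org.apache.hadoop.io");
        }
        jars.forEach(System.out::println);
    }
}
```

On a standard installation we would expect the list to include at least the `hadoop-common` JAR; that JAR (or the Maven dependency that provides it) is what needs to be on the compile classpath.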


Conclusion

By working through these common causes, we can fix the “package org.apache.hadoop.io does not exist” error and get our Hadoop project back on track, with a little help from our Support Experts.
