Overview

- Importance of Innodb_flush_log_at_trx_commit Variable

- Application of Innodb_flush_log_at_trx_commit Variable

- Configuration of Innodb_flush_log_at_trx_commit Variable

- Conclusion

Importance of Innodb_flush_log_at_trx_commit Variable

The MySQL InnoDB storage engine’s innodb_flush_log_at_trx_commit setting is essential for ensuring the consistency and dependability of database transactions. We can use this variable to set the frequency at which InnoDB flushes its transaction logs to disk.

The memory (InnoDB Buffer Pool) carries out the majority of InnoDB’s operations. Also, record any new changes in memory in the transaction log (InnoDB Log File). It will flush (write) data to the storage disk (durable storage) from the transaction log.

We must save all transaction data on hard disk storage in order to provide data durability. But think about what would happen on a busy system if InnoDB tried to flush (write) data to a slow-running disk with each transaction commit. Thus, how can we handle the scenario when we have to keep track of every transaction while still ensuring optimal system performance? We can instruct InnoDB when to flush (write) data to disk based on the system, which is how InnoDB handles this scenario.

In InnoDB, we can configure transaction handling to either write to the log and flush to disk for every transaction commit, write to the log and flush data to disk at a specified interval, or write to the log for every transaction commit but only flush to disk at intervals, thus balancing between durability and performance according to the needs.

Let’s see why we consider it as an important factor:

1. Durability: The variable determines the database changes that are safely put on the disk. We can determine the degree of transaction persistence by setting it to different values.

2. Transaction Logging: Before changes are written to the real data files, InnoDB stores them in a transaction log. This log makes sure that in the case of a crash or system failure, transactions can be recovered.

3. Performance vs. Durability Trade-off: We can find a balance between durability and performance with this variable. The default setting of 1 causes InnoDB to flush the transaction log to disk upon each commit of a transaction, guaranteeing maximum durability but possibly affecting performance because of frequent disk writes. If we set it to 0, InnoDB will only flush the log once every second, which can boost performance but may cause some data loss in the event of a crash.

4. Replication: The consistency promises between the master and its replicas may be impacted by the innodb_flush_log_at_trx_commit setting in systems that use MySQL replication. Various configurations for replicas are possible based on the desired balance between consistency and performance.

Application of Innodb_flush_log_at_trx_commit Variable

We can apply 3 values for the innodb_fush_log_at_trx_commit variable. Let’s see how these factors affect the behaviour of the flush-to-disk:

innodb_flush_log_at_trx_commit=0When

innodb_flush_log_at_trx_commit=1InnoDB will write the log buffer to the log file at each commit when we set

innodb_flush_log_at_trx_commit=2Data shifts from the InnoDB buffer to the operating system’s cache during the buffer-to-log process, which is writing from the InnoDB buffer pool to the InnoDB transaction log file. It is not actually written to the persistent storage. Thus, data loss of up to one second is possible if we set innodb_fush_log_at_trx_commit to either 0 or 2.

As the value of innodb_fush_log_at_trx_commit is 1, InnoDB forces the operating system to flush data to persistent storage. Writing data to disk is a sluggish process that requires I/O blocking in order to finish. Therefore, there’s a potential that if we use this option, the number of transactions per second will decrease.

Keep in mind that MySQL will automatically commit transactions by default. Large database restorations benefit greatly from setting innodb_fush_log_at_trx_commit to 2. Although there are differing views on this matter, it is best to test this for ourselves. In my experience, the shortened healing time was incredibly beneficial. The Data Definition Language (DDL) changes flush the InnoDB log regardless of the innodb_fush_log_at_trx_commit parameter.

After seeing the behaviour of each value, we must now select the variable value based on the needs of the application.

InnoDB won’t flush to disk after each commit transaction if the value is 0. Instead, it will write from the buffer to the log once every second. The issue with this option is that data loss of up to one second may occur in the event of a power outage or system crash. We can set the value to 1 to force InnoDB to flush to disk with each commit of a transaction if data security is the top priority. But in this situation, performance can decline. If the primary objective is performance, we can set the value to 2. Because InnoDB writes to disk only once per second rather than for each commit of a transaction, it can significantly increase the performance. The transaction log contains recoverable data in the event of a crash or power outage.

Configuration of Innodb_flush_log_at_trx_commit Variable

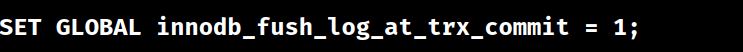

It is possible to set the innodb_fush_log_at_trx_commit dynamically without having to restart the server, and its scope is GLOBAL. The following command line option allows us to set innodb_fush_log_at_trx_commit dynamically:

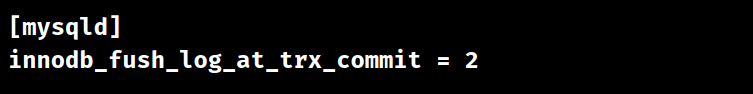

Also, we can set it as follows on the config file:

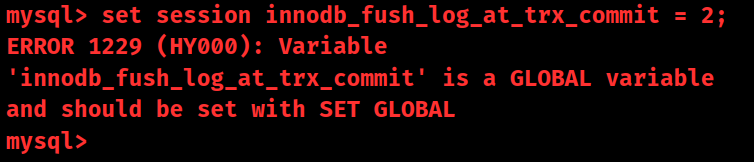

A server restart is necessary. Prior to changing the settings file, turn it on dynamically to assess the effects. The following error may appear if we attempt to set innodb_fush_log_at_trx_commit as session-level variables:

The innodb_flush_log_at_timeout variable specifies the frequency at which InnoDB flushes log files. The frequency has a default value of 1 and a range of 1 to 2700 seconds. In the event of a power outage or system crash, the likelihood of losing data increases with this number. For instance, if we set this value to 4 seconds, we could lose data for up to 4 seconds in the event of a power outage. In a replication topology, we can leave innodb_fush_log_at_trx_commit = 1 as the default setting to preserve data consistency and durability.

[Need to know more? Get in touch with us if you have any further inquiries.]

Conclusion

In conclusion, the innodb_flush_log_at_trx_commit setting is crucial for managing the balance of data durability to performance in InnoDB, ensuring the dependable storing of database transactions and their recoverability in the event of an error.

The innodb_flush_log_at_trx_commit variable in InnoDB is essential for preserving the integrity of database transactions. The setup affects the frequency of the logs flushing to disk. This, in turn, affects how durable and performant the database system is. With the flexibility to fine-tune their database configurations to specific requirements, administrators can now balance the demands of their applications’ performance requirements with the requirements of data integrity. Finding the ideal balance between efficiency and dependability in MySQL systems requires an awareness of and proper configuration for this variable, regardless of whether data integrity or speed optimization is the top priority.

PREVENT YOUR SERVER FROM CRASHING!

Never again lose customers to poor server speed! Let us help you.

Our server experts will monitor & maintain your server 24/7 so that it remains lightning fast and secure.

0 Comments