This article gives a detailed explanation of the Kinesis Firehose failure handling mechanism. As a part of our AWS Support Services, Bobcares offers solutions to every query that comes our way.

Table of Contents

- Introduction To Kinesis Firehose

- Reasons Behind The Kinesis Firehose Failure

- Kinesis Firehose Failure Handling Mechanism

- Conclusion

Introduction To Kinesis Firehose

Amazon Kinesis Firehose is AWS’s fully managed service for ingesting, transforming, and loading (ETL) real-time streaming data into data lakes, data stores, and analytics tools. It streamlines the capture and loading of streaming data by automatically handling tasks such as buffering, compression, and encryption.

The main features include ease of use, scalability, durability, reliability, integration with AWS services, and more.

A delivery stream on Amazon Kinesis Firehose receives data written by the Kinesis Firehose destination. When we designate an Amazon S3 bucket or an Amazon Redshift table in the delivery stream, Firehose automatically moves the data there. The Kinesis Producer destination should be used in order to write data to Amazon Kinesis Streams. We can use the Amazon S3 destination to write data directly to Amazon S3.

Reasons Behind The Kinesis Firehose Failure

Although Amazon Kinesis Firehose is a robust and dependable service, failures can occur in specific situations, as with any technology. If Kinesis Data Firehose runs into problems delivering or processing data, it retries until the configured retry duration expires. If the retry duration expires before the data is successfully delivered, it backs up the data to the configured S3 backup bucket. If the destination is Amazon S3 and delivery fails, or if delivery to the backup S3 bucket fails, Kinesis Data Firehose keeps attempting delivery until the retention period expires. For DirectPut delivery streams, Kinesis Data Firehose retains records for 24 hours. For a delivery stream whose data source is a Kinesis data stream, we can modify the retention period.

If the data source is a Kinesis data stream, Kinesis Data Firehose retries the DescribeStream, GetRecords, and GetShardIterator operations indefinitely.

If the delivery stream uses DirectPut, check the IncomingBytes and IncomingRecords metrics to see if there is any incoming traffic. When using the PutRecord or PutRecordBatch API, make sure to catch exceptions and retry. We advise a retry policy with multiple retries and exponential back-off with jitter. Additionally, when using the PutRecordBatch API, make sure the code checks the response’s FailedPutCount value even when the API call succeeds.
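The retry advice above can be sketched roughly as follows. This is a minimal illustration, not a definitive implementation: the function name `put_batch_with_retry` and its parameters are hypothetical, and `client` is assumed to be a boto3 Firehose client (for example `boto3.client("firehose")`). It resends only the records that PutRecordBatch reports as failed and waits with exponential back-off plus jitter between attempts.

```python
import random
import time


def put_batch_with_retry(client, stream_name, records, max_attempts=5,
                         base_delay=0.1, max_delay=5.0, sleep=time.sleep):
    """Send records with PutRecordBatch, retrying only the ones that failed.

    Returns the records that still could not be written after all attempts.
    The function name and parameters are illustrative assumptions.
    """
    pending = list(records)
    for attempt in range(max_attempts):
        try:
            response = client.put_record_batch(
                DeliveryStreamName=stream_name, Records=pending
            )
        except Exception:
            # The whole call failed; in real code, narrow this to
            # botocore.exceptions.ClientError and connection errors.
            response = None

        if response is not None:
            # A successful call can still contain per-record failures.
            if response.get("FailedPutCount", 0) == 0:
                return []
            # Per-record results come back in the same order as the request.
            pending = [
                record
                for record, result in zip(pending, response["RequestResponses"])
                if "ErrorCode" in result
            ]

        if attempt < max_attempts - 1:
            # Exponential back-off with full jitter.
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))
    return pending
```

Whatever the function returns is what the caller still has to deal with, for example by logging it or parking it in another queue. PutRecordBatch also limits how many records one call may carry, so larger inputs would need to be split into chunks before calling this helper.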

Examine the IncomingBytes and IncomingRecords metrics for the source data stream if the delivery stream is derived from a Kinesis data stream. Furthermore, confirm that the delivery stream is emitting the DataReadFromKinesisStream.Bytes and DataReadFromKinesisStream.Records metrics.

The following factors may contribute to an unsuccessful delivery between Kinesis Data Firehose and Amazon OpenSearch Service:

Delivery Location Incorrect

Verify that the delivery destination specified for Kinesis Data Firehose, and the ARN we are using, are correct. We can view the DeliveryToElasticsearch.Success metric in Amazon CloudWatch to see if the delivery was successful. A DeliveryToElasticsearch.Success value of zero confirms that deliveries are failing. See Delivery to OpenSearch Service in Data delivery CloudWatch metrics for additional details regarding the DeliveryToElasticsearch.Success metric.

No Inbound Data

Verify that Kinesis Data Firehose is receiving data by keeping an eye on the IncomingRecords and IncomingBytes metrics. When those metrics have a zero value, it indicates that no records are reaching the Kinesis Data Firehose.

If the delivery stream uses Amazon Kinesis Data Streams as a source, check the Kinesis data stream’s IncomingRecords and IncomingBytes metrics. These two metrics show whether there is incoming data. A value of zero confirms that no records are reaching the streaming services.

Examine the DataReadFromKinesisStream.Records and DataReadFromKinesisStream.Bytes metrics to confirm whether data arrives at Kinesis Data Firehose from Kinesis Data Streams. See Data ingestion through Kinesis Data Streams for additional details regarding the data metrics. A value of zero may indicate a failure to deliver to OpenSearch Service rather than a problem between Kinesis Data Streams and Kinesis Data Firehose.

Additionally, we can verify that the Kinesis Data Firehose PutRecord and PutRecordBatch API calls are being made correctly. If we’re not seeing any incoming data flow metrics, examine the producer that handles the PUT operations. See Troubleshooting Amazon Kinesis Data Streams Producers for additional details on resolving producer application problems.
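The metric checks in this section can also be scripted. The sketch below is one possible approach, assuming `cloudwatch` is a boto3 CloudWatch client (for example `boto3.client("cloudwatch")`); the helper names `metric_sum` and `has_incoming_data` are hypothetical. It sums IncomingRecords for a delivery stream over a recent window and reports whether anything arrived.

```python
from datetime import datetime, timedelta, timezone


def metric_sum(cloudwatch, namespace, metric_name, dimension_name,
               dimension_value, minutes=15):
    """Sum a CloudWatch metric over the last `minutes` minutes."""
    end = datetime.now(timezone.utc)
    response = cloudwatch.get_metric_statistics(
        Namespace=namespace,
        MetricName=metric_name,
        Dimensions=[{"Name": dimension_name, "Value": dimension_value}],
        StartTime=end - timedelta(minutes=minutes),
        EndTime=end,
        Period=60,
        Statistics=["Sum"],
    )
    return sum(point["Sum"] for point in response["Datapoints"])


def has_incoming_data(cloudwatch, delivery_stream_name, minutes=15):
    """Return True if the delivery stream received any records recently."""
    total = metric_sum(
        cloudwatch, "AWS/Firehose", "IncomingRecords",
        "DeliveryStreamName", delivery_stream_name, minutes,
    )
    return total > 0
```

The same `metric_sum` helper should work for the source data stream by passing the `AWS/Kinesis` namespace with the `StreamName` dimension, or for DataReadFromKinesisStream.Records on the delivery stream.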

Kinesis Data Firehose Logs Disabled

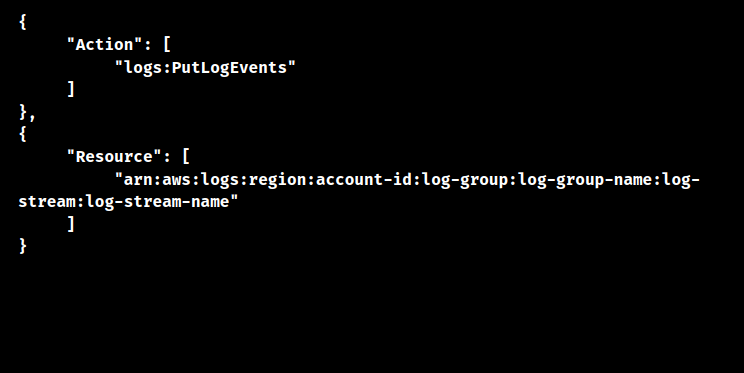

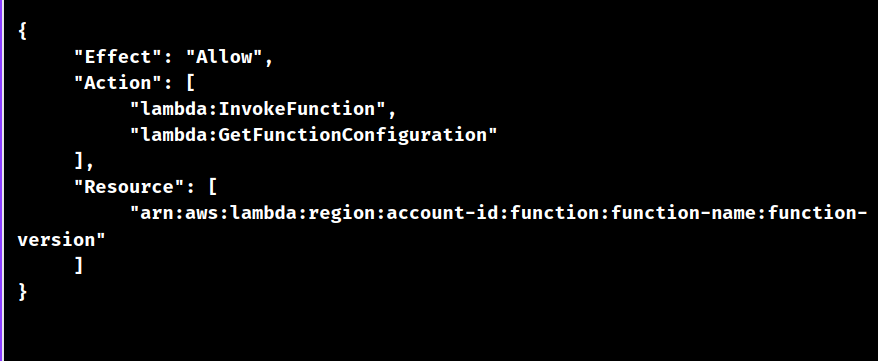

Verify that Kinesis Data Firehose logging is turned on. Without logging, the error logs that explain a delivery failure are not available. Next, look in CloudWatch Logs for the /aws/kinesisfirehose/delivery-stream-name log group. The Kinesis Data Firehose role needs the following permissions:

Check to see if we’ve given Kinesis Data Firehose access to a public OpenSearch Service destination. If we use the data transformation capability, we must also grant AWS Lambda access.
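Whether logging is turned on can also be checked programmatically. The following is a rough sketch, assuming `firehose` is a boto3 Firehose client and that each destination description returned by DescribeDeliveryStream carries a `CloudWatchLoggingOptions` entry; the function name `logging_status` is hypothetical.

```python
def logging_status(firehose, delivery_stream_name):
    """Map each destination description type to its CloudWatch logging flag."""
    description = firehose.describe_delivery_stream(
        DeliveryStreamName=delivery_stream_name
    )["DeliveryStreamDescription"]

    status = {}
    for destination in description.get("Destinations", []):
        for key, value in destination.items():
            # Destination-specific descriptions are dicts; DestinationId is a string.
            if isinstance(value, dict) and "CloudWatchLoggingOptions" in value:
                options = value["CloudWatchLoggingOptions"]
                status[key] = bool(options.get("Enabled", False))
    return status
```

A result such as `{"ExtendedS3DestinationDescription": False}` would suggest that error logging is disabled for that destination and should be enabled before investigating further.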

Permission Issues

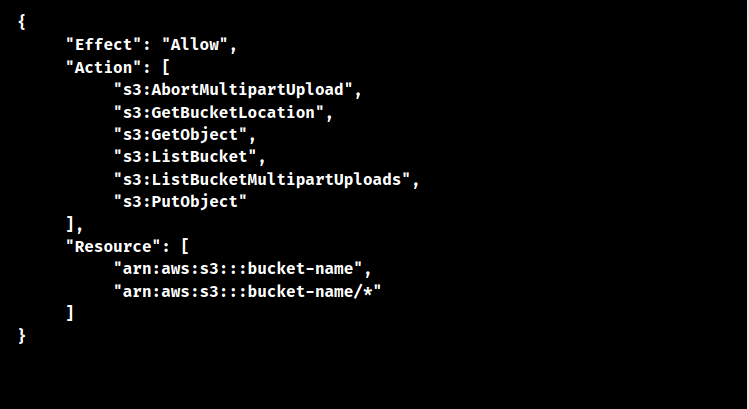

Several permissions are necessary depending on how the Kinesis Data Firehose is configured. To deliver records to an Amazon Simple Storage Service (Amazon S3) bucket, the following permissions are required:

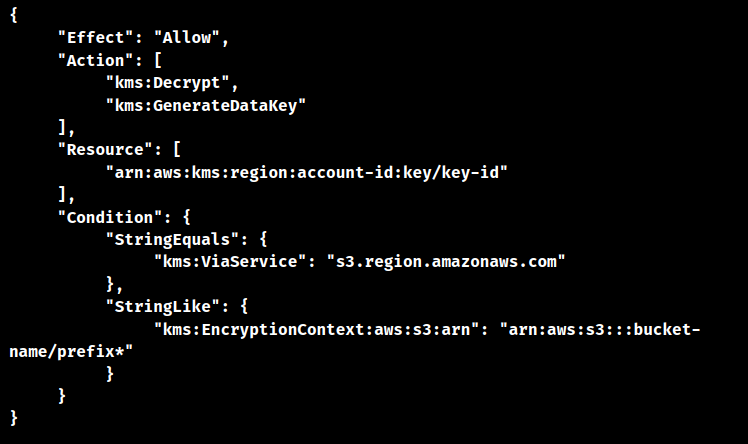

The following permissions are required if the Kinesis Data Firehose is encrypted at rest:

To grant permissions for OpenSearch Service access, modify the policy as follows:

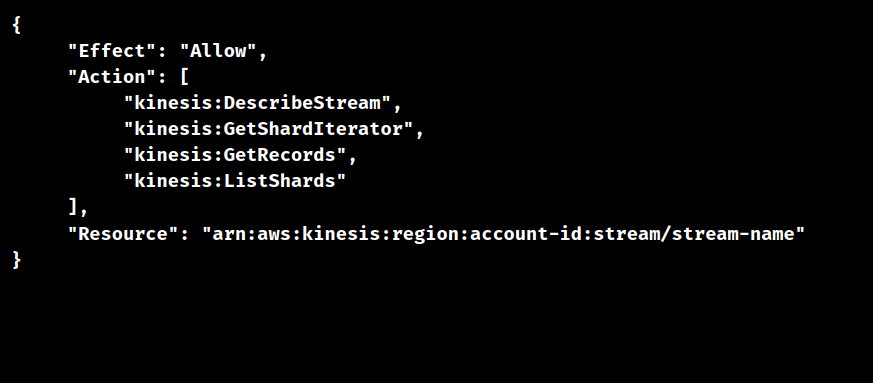

If we’re using Kinesis Data Streams as a source, make the following changes to the permissions:

We can update the policy to configure Kinesis Data Firehose for data transformation as follows:

Troubles Invoking AWS Lambda Functions

Check the Kinesis Data Firehose ExecuteProcessing.Success and Errors metrics to confirm that Kinesis Data Firehose has tried to invoke the Lambda function. If the Lambda function hasn’t been invoked, check whether the invocation time exceeds the timeout parameter. The Lambda function may need more memory or a larger timeout value in order to finish on time. See Using invocation metrics for additional details on invocation metrics.

Check the Amazon CloudWatch Logs group /aws/lambda/lambda-function-name to determine why Kinesis Data Firehose isn’t invoking the Lambda function. If data transformation fails, the failed records are backed up to the S3 bucket in the processing-failed folder. The records in the S3 bucket also include the error message for the failed attempt.
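To show how records end up being treated as failed during transformation, here is a minimal sketch of a transformation Lambda function. The `transform` helper and the `processed` field are hypothetical, and the payload is assumed to be a JSON object. Each input record is returned with its `recordId`, a `result` of `Ok` or `ProcessingFailed`, and base64-encoded `data`.

```python
import base64
import json


def transform(payload):
    """Hypothetical transformation: tag each JSON object as processed."""
    payload["processed"] = True
    return payload


def lambda_handler(event, context):
    output = []
    for record in event["records"]:
        try:
            payload = json.loads(base64.b64decode(record["data"]))
            data = json.dumps(transform(payload)) + "\n"
            output.append({
                "recordId": record["recordId"],
                "result": "Ok",
                "data": base64.b64encode(data.encode("utf-8")).decode("utf-8"),
            })
        except (ValueError, TypeError) as exc:
            # Log the reason so it shows up in the function's CloudWatch log group.
            print(f"record {record['recordId']} failed: {exc}")
            # Return the original data and mark the record as failed.
            output.append({
                "recordId": record["recordId"],
                "result": "ProcessingFailed",
                "data": record["data"],
            })
    return {"records": output}
```

Marking a single record as `ProcessingFailed` lets the rest of the batch go through, whereas an unhandled exception would fail the whole invocation.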

Domain Health for OpenSearch Service Problems

Verify the following metrics to make sure the OpenSearch Service is operating well:

CPU utilization: The data node may not be able to respond to incoming data or requests if this metric is continuously high. The cluster may need to be scaled.

JVM memory pressure: The cluster may trigger memory circuit breaker exceptions if the JVM memory pressure is continuously higher than 80%. These exceptions may prevent the data from being indexed.

ClusterWriteBlockException: This indexing block appears if additional storage space is required or if the domain is experiencing excessive JVM memory pressure. New data cannot be indexed if a data node does not have enough storage space.

Kinesis Firehose Failure Handling Mechanism

Consider a case where we send batches of messages to Redshift via Firehose using Lambda. Firehose retries for up to 24 hours and then deletes the message. After X failed attempts, we’d like to move the failed messages to another queue, preferably without cross-checking the target Redshift database.

What is being tracked here are failures to PutRecord to the Firehose delivery stream, rather than failures in the Kinesis -> S3 -> Redshift flow.

We can specify the number of retries when we initialize the Firehose client. When an exception is received (failure to PutRecord to the stream), the SDK automatically retries up to the maximum number of retries we set. This happens under the hood, in the SDK, so we won’t know the retries have been exhausted until the exception bubbles up to the function. When we get this exception, we can assume that the number of retries has been reached. The exception handling can then include sending the message to an SQS queue.
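A minimal sketch of this pattern, assuming boto3: the retry count is set through botocore’s `Config` when the client is created, and the exception handler diverts the message to SQS. The function names `make_clients` and `put_or_divert`, and the stream name and queue URL passed in, are hypothetical.

```python
import json


def make_clients(max_attempts=5):
    """Create boto3 clients; SDK-level attempts on Firehose are capped."""
    import boto3
    from botocore.config import Config

    config = Config(retries={"max_attempts": max_attempts, "mode": "standard"})
    return boto3.client("firehose", config=config), boto3.client("sqs")


def put_or_divert(firehose, sqs, stream_name, queue_url, message):
    """Put one record; if the SDK gives up, send the message to SQS instead.

    Returns "firehose" or "sqs" depending on where the message ended up.
    """
    data = (json.dumps(message) + "\n").encode("utf-8")
    try:
        firehose.put_record(DeliveryStreamName=stream_name,
                            Record={"Data": data})
        return "firehose"
    except Exception:
        # The SDK has already used up its retries by the time this is raised.
        # In real code, narrow this to botocore.exceptions.ClientError.
        sqs.send_message(QueueUrl=queue_url, MessageBody=json.dumps(message))
        return "sqs"
```

In a Lambda function, the clients would typically be created once outside the handler with `make_clients()` and reused across invocations.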

Alternatively, instead of using Firehose directly, the pipeline can be structured quite differently, as follows.

1. Have the Lambda function write to a Firehose that has S3 as its destination.

2. Create a Kinesis Analytics application that reads from the Firehose.

3. After Kinesis Analytics, configure separate streams. Successful records (in-stream) go to a Firehose that loads into Redshift. Error records (error-stream) go to another Firehose that loads into S3.

As a result, successful records are loaded into Redshift, and records that fail are written to S3.


Conclusion

In conclusion, Kinesis Firehose’s failure handling mechanism is an essential component of its dependability and data delivery process, offering protections to handle and resolve any problems that might emerge throughout the streaming data delivery pipeline. Users are able to tailor these mechanisms to their own needs and guarantee the stability of their workflows for processing data.
