What has a royal wedding got to do with fashion? Apparently, a lot. In April 2011, within minutes of Kate Middleton stepping on the red carpet, the wedding dress designer’s name “Sarah Burton” was trending on Twitter. The interest in the dress was enormous, and fashion houses around the world immediately started offering “Kate wedding dress” replicas.

Every fashion house worth its salt knows how to cash in on such trends, and they use social media extensively to drive customers to their websites. It is therefore important for these sites to be able to deal with the resulting surges in traffic. However, not all websites are well equipped to handle high traffic, and some do crash when traffic spikes happen.

This was exactly the case with a large apparel website that contacted us for assistance. Every time they launched a new marketing campaign in social media, the site received a surge in traffic that was 15-20 times the normal traffic volume. The site would then throw a slew of errors and just refuse to load again. Every time this happened, the website owner would amp up the server resources, until one day it felt like a futile effort. Bobcares was called in to fix this problem once and for all.

I remember the day when this customer contacted us. A new social media campaign had led to yet another website crash, and the customer wanted someone to salvage the situation. The request was tagged as an emergency, and our performance engineering expert Hamish Lawrence took over the case. If I know anything about Hamish, it is that he relishes a challenge, and gets the job done.

“The database couldn’t handle the volume of connections it was receiving”

Hamish dove into the problem and his first priority was to prevent another crash. In Hamish’s words, “The moment I saw the website I knew something was wrong with the database. There were connection errors all over the place. It looked like the database couldn’t handle the volume of connections it was receiving. That’s what I wanted to fix first.”

Let me explain what he meant by that. When visitors came to the website, a variety of options would be displayed which allowed them to choose from a wide range of offerings. For example, a visitor could choose only black dresses of “XS” size that belonged to only a couple of brands. To make such a customized display possible, the site would send a complex query to the products database asking for the exact combination of features. If the visitor changed display settings, another such database query would be executed. Considering that a visitor goes through at least 5 pages, the peak traffic would generate thousands of simultaneous queries that the database was unable to handle.
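To make the idea of a "complex query per filter change" concrete, here is a minimal sketch in Python. The `products` table, its columns, the brand names and the use of SQLite are all assumptions made for illustration; the case study does not say which database or schema the site used.

```python
import sqlite3


def build_product_query(color=None, size=None, brands=None):
    """Build a parameterized SELECT for the filters a visitor picked.

    The table and column names are hypothetical, not the site's real schema.
    """
    clauses, params = [], []
    if color is not None:
        clauses.append("color = ?")
        params.append(color)
    if size is not None:
        clauses.append("size = ?")
        params.append(size)
    if brands:
        placeholders = ", ".join("?" for _ in brands)
        clauses.append(f"brand IN ({placeholders})")
        params.extend(brands)
    sql = "SELECT id, name FROM products"
    if clauses:
        sql += " WHERE " + " AND ".join(clauses)
    sql += " ORDER BY id"
    return sql, params


def demo_connection():
    """Create an in-memory database with a handful of made-up products."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE products ("
        "id INTEGER PRIMARY KEY, name TEXT, color TEXT, size TEXT, brand TEXT)"
    )
    conn.executemany(
        "INSERT INTO products (name, color, size, brand) VALUES (?, ?, ?, ?)",
        [
            ("Dress 1", "black", "XS", "BrandA"),
            ("Dress 2", "black", "M", "BrandA"),
            ("Dress 3", "red", "XS", "BrandB"),
            ("Dress 4", "black", "XS", "BrandC"),
            ("Dress 5", "black", "XS", "BrandB"),
        ],
    )
    return conn


if __name__ == "__main__":
    conn = demo_connection()
    sql, params = build_product_query("black", "XS", ["BrandA", "BrandB"])
    print(sql)
    print(conn.execute(sql, params).fetchall())
```

Every change of a filter produces a fresh query like this one, which is why a traffic spike multiplies so quickly into database load.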

In order to avoid another crash, the database settings were reconfigured to allow the site to handle about 7000 visitors simultaneously. That secured the site for the immediate future.

“Sooner or later, the database locks are going to crash the site again.”

However, as Hamish recounted, adjusting the connection limit wouldn’t be the end of issues. “I saw a lot of database write locks. Website read requests were waiting for the locks to be released. Sooner or later, the database locks are going to crash the site again,” Hamish says.

Let me give you a bit of background on that. This website used a dynamic pricing strategy to offer competitive rates based on what other companies were offering. A “dynamic pricing” plugin was used to update the product information every 30 minutes or so. When such updates happened, the server put a “write lock” on the data to make sure half-baked information was not presented on the website. When a “write lock” was activated, all other requests to the database would be put on hold. So, whenever products were updated by the dynamic pricing plugin, site visitors would receive a time-out error. As the number of products and site visitors increased, this issue was only going to get worse.

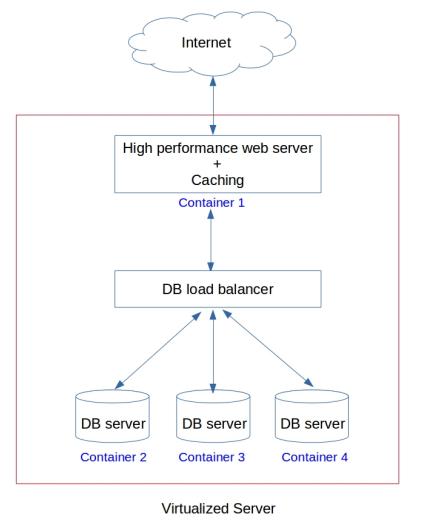

To solve this, we formulated a solution where there would be multiple copies of the website database, and writes would be restricted to just one database. These databases would be linked with each other so that a change in one would be replicated in all others. In this way, customers would never receive a database time-out error. To make things even better, we reduced the number of read requests hitting the database by using “memory caches”. Caches are copies of the most popular content, stored in the server memory. So, whenever a visitor clicked on a popular link, the database would not be accessed. Instead, the page would be quickly served from the super-speedy server memory.
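The routing idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `FakeDatabase` and `ReplicatedStore` are hypothetical names, replication is simulated by copying values synchronously, and the split into one write database plus two read replicas is a guess at how the three database servers were used. A real deployment would rely on the database's own replication and a dedicated caching service.

```python
import itertools


class FakeDatabase:
    """Stand-in for a database server: a dict plus a read counter."""

    def __init__(self, name):
        self.name = name
        self.rows = {}
        self.reads = 0


class ReplicatedStore:
    """Send writes to one primary, spread reads over replicas, cache hot keys."""

    def __init__(self, primary, replicas):
        self.primary = primary
        self.replicas = replicas
        self._next_replica = itertools.cycle(replicas)
        self.cache = {}  # in-memory copies of recently read values

    def write(self, key, value):
        # Only the primary accepts writes.
        self.primary.rows[key] = value
        # Replication is simulated here by copying the value immediately.
        for replica in self.replicas:
            replica.rows[key] = value
        # Drop any cached copy so readers do not see a stale price.
        self.cache.pop(key, None)

    def read(self, key):
        # Popular content is served from memory without touching a database.
        if key in self.cache:
            return self.cache[key]
        # Cache miss: pick the next replica in round-robin order.
        replica = next(self._next_replica)
        replica.reads += 1
        value = replica.rows.get(key)
        if value is not None:
            self.cache[key] = value
        return value


if __name__ == "__main__":
    primary = FakeDatabase("primary")
    replicas = [FakeDatabase("replica-1"), FakeDatabase("replica-2")]
    store = ReplicatedStore(primary, replicas)
    store.write("sku-1", 49.0)
    print(store.read("sku-1"), store.read("sku-1"))
    print({r.name: r.reads for r in replicas})
```

In this sketch a pricing update touches only the primary, so a visitor's read never waits on the write, and repeated reads of the same product stop reaching the databases at all.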

“I chose light-weight virtualization as it offered better performance and no vendor lock-ins.”

The new solution consisted of one web server, one caching system, one load balancer and three database servers. To implement this, Hamish had a couple of options – “I wanted the solution to be easily scalable. For this, I could put the servers in a cloud service or I could use virtualization. Finally, I chose light-weight virtualization as it offered better performance and no vendor lock-ins.”

Let me explain that. This website was expected to grow to at least double the current size within one year, and it was important that the solution we designed would be easily scalable. When talking about scalability, most website owners think of a cloud solution. Yes, it is easy to scale resources up and down as needs change, but unless a site spends heavily on RAID arrays (like Amazon EBS RAID arrays), the I/O performance will be less than that of a dedicated server. This is because cloud systems work on shared resources, and every time a cloud user sends in a large number of “write” requests, the system will slow down. So, from a performance point of view, a dedicated server was a better option.

Another consideration was vendor lock-ins. Most cloud providers have proprietary systems that make migration of multi-server systems a real pain. In contrast, a light-weight virtualization solution like LXC makes migration to other servers or even another data center very easy. You just need LXC installed on the destination server. So, for this website, we opted for a light-weight server virtualization solution in which each server instance was run as a container.

3 database containers, load balancer and web server implemented as containers in a single dedicated server.

The new hosting solution was implemented using just one high performance dedicated server, keeping the total cost to the customer almost the same. By using virtual servers for various services, it would be easy to scale up the resources, or even move the services to another server if the resources in the current server were exhausted. So, with a few changes in service architecture, this website was fully equipped to handle a growth of up to 4 times the current volume.

The best hosting solution for your site

Owners of growing websites often get the advice to beef up server resources. Though it might help for small websites, performance issues of larger sites are more likely to be related to the website architecture. With a few changes in website architecture, you can get your site ready to meet the growth in website traffic. The secret is in finding out where the exact performance bottleneck is. Getting your site periodically audited by a performance expert can help you keep your site responsive.

Bobcares helps high traffic websites and online businesses deliver reliable, secure services through proactive performance management, security management and 24/7 emergency server administration.
