It is no news that Google and other search engines treat site speed as a ranking signal. Studies show that customer confidence also suffers badly from slow page loads, unsecured websites and similar problems. So, webmasters all over the world now treat site speed as critical to their business.

For example, a business owner recently contacted us to fix performance issues on their WordPress site. The owner reported high visitor bounce rates and customers complaining about long wait times. The site received up to 1500 visitors an hour, but pages took up to 10 seconds to load during peak hours.

Once the site owner signed up for our Web Server Management service, we first performed a full benchmark audit on their servers to find the performance bottlenecks. The primary causes of poor performance were bulky Apache servers, outdated PHP technology and unoptimized caching systems. So, we created a new web infrastructure using light-weight services that freed up resources and cut the average page load time from 10 seconds to less than 2 seconds.

This is the story of how we improved the loading speed of this busy WordPress website.

Building a light web infrastructure

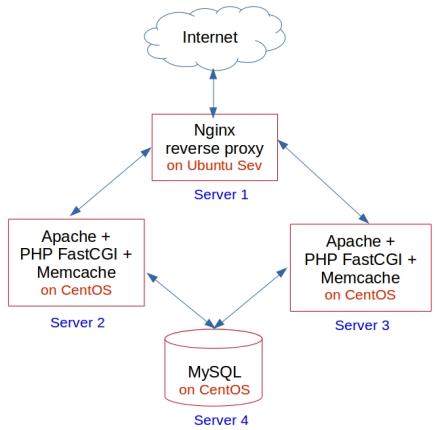

The current web site infrastructure consisted of two Apache servers which were boosted by caching systems and speed optimized PHP. An Nginx reverse proxy sat in front of the Apache servers, and acted as a load-balancer and a first-level caching server.

WordPress site infrastructure using Nginx, Apache, FastCGI and Memcache

As you can see, the current infrastructure used 4 servers. In this system, performance was degraded by heavy operating systems, bulky Apache servers and inefficient caching methods.

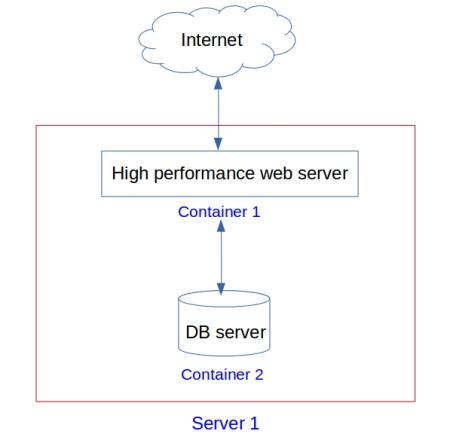

So, we designed a new system that cut down resource wastage by using minimal operating systems and light-weight server virtualization. In the new design, web and database servers ran in light-weight containers, powered by a lean operating system that imposed minimal resource overhead. This way, we were also able to reduce the number of servers from 4 to just 1.

New single server infrastructure which ran web and database servers as OS virtualized containers.

Building light web services using OS virtualization

When creating the new design, we started with the basics. We asked ourselves: do we need such an elaborate infrastructure? At that point the infrastructure consisted of two Apache servers, a database server and an Nginx reverse proxy. Upgrading this system meant upgrading resources in the physical servers, or adding new servers to the cluster. It was a pretty complex setup, with resource overheads imposed by a full-scale operating system on each of these individual servers.

For the new infrastructure, we wanted a simpler solution that avoided the software bulk and made efficient use of available resources. Light-weight virtualization (aka OS virtualization) seemed to be the ideal fit because it depended only on the Linux kernel to run, and used minimal images of application services to run the websites. We considered Docker and LXC for this purpose, and found that LXD/LXC would give the same resource savings while maintaining the interactive server environment the customer was used to.

Our light-weight virtualization solution had the further advantage that web/database services could be easily migrated to a more powerful server or a cloud system at a later date using a simple container migration tool. This way the web site owner would never be tied down to an inflexible legacy system.

So, now we had an infrastructure design which cut down on excess fat from the operating system layer. That freed up about 20% more server resources, which in turn helped pages load faster.

Creating a lean and mean web server

Till this point we’d succeeded in slimming down the OS layer. Now, we wanted to get rid of the bulky Apache and PHP processes. Apache is not the best option for handling many simultaneous connections under high traffic, and legacy PHP setups such as FastCGI are no longer the best out there. Nginx did help in serving static content faster, but several interactive features in the site required a fast PHP engine in the back end.

So, our primary focus was on getting a good PHP engine. PHP-FPM (FastCGI Process Manager) was the logical next choice for faster PHP execution, but we’d hit on a promising new technology called HipHop Virtual Machine (HHVM), which we’d implemented successfully on many of our internal servers. So, we used that in place of the existing FastCGI engine for the WordPress site.

Putting it all together

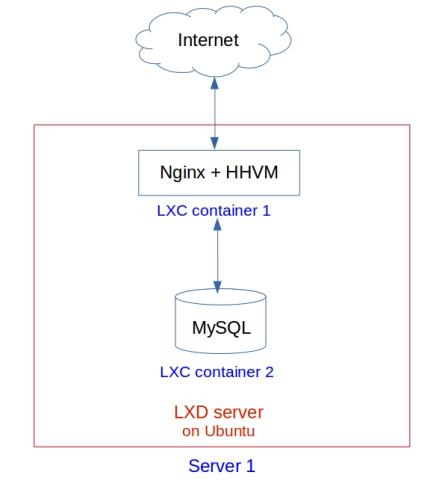

Now, the web site architecture looked like this:

WordPress web site using Nginx and HHVM on LXD/LXC over Ubuntu

The three web servers required in the earlier design were consolidated into a single light-weight LXC container running the Nginx web server and HHVM. The server settings were tweaked for optimal caching performance. The MySQL server was ported to a MySQL LXC container, and was optimized for performance using InnoDB.

Testing the web site performance

This new system was then tested using ApacheBench against the older system. The results were as below:


ApacheBench test in the old infrastructure (Nginx load balancer in front of Apache servers)

    Server Software: nginx
    Server Hostname: mywordpress.com
    Server Port: 443
    SSL/TLS Protocol: TLSv1.2,ECDHE-RSA-AES256-GCM-SHA384,2048,256
    Document Path: /
    Document Length: Variable
    Concurrency Level: 50
    Time taken for tests: 44.174 seconds
    Complete requests: 500
    Failed requests: 0
    Total transferred: 92070500 bytes
    HTML transferred: 91376000 bytes
    Requests per second: 11.32 [#/sec] (mean)
    Time per request: 4417.433 [ms] (mean)
    Time per request: 88.349 [ms] (mean, across all concurrent requests)
    Transfer rate: 2035.40 [Kbytes/sec] received

ApacheBench test in the new Nginx + HHVM LXC containers

    Server Software: nginx
    Server Hostname: mywordpress.com
    Server Port: 443
    SSL/TLS Protocol: TLSv1.2,ECDHE-RSA-AES256-GCM-SHA384,2048,256
    Document Path: /
    Document Length: Variable
    Concurrency Level: 50
    Time taken for tests: 8.206 seconds
    Complete requests: 500
    Failed requests: 0
    Total transferred: 91303500 bytes
    HTML transferred: 90619500 bytes
    Requests per second: 60.93 [#/sec] (mean)
    Time per request: 820.579 [ms] (mean)
    Time per request: 16.412 [ms] (mean, across all concurrent requests)
    Transfer rate: 10865.93 [Kbytes/sec] received
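For readers who want to repeat this kind of comparison, here is a minimal Python sketch that builds an ApacheBench command and pulls the headline numbers out of a report. The 500 requests and concurrency of 50 are inferred from the "Complete requests" and "Concurrency Level" fields above, not from a recorded command line, and the function names and result keys are hypothetical. It assumes the report has one field per line, as `ab` normally prints it.

```python
import re

# Labels as printed by ApacheBench, mapped to (result key, converter).
# The result keys are illustrative names, not part of ab itself.
SIMPLE_FIELDS = {
    "Concurrency Level": ("concurrency", int),
    "Time taken for tests": ("total_seconds", float),
    "Complete requests": ("complete_requests", int),
    "Failed requests": ("failed_requests", int),
    "Requests per second": ("requests_per_second", float),
}

NUMBER = re.compile(r"\d+(?:\.\d+)?")


def build_ab_command(url, requests=500, concurrency=50):
    """Return an argv list for ab: -n is total requests, -c is concurrency."""
    return ["ab", "-n", str(requests), "-c", str(concurrency), url]


def parse_ab_report(text):
    """Extract selected numeric fields from a one-field-per-line ab report."""
    metrics = {}
    for line in text.splitlines():
        label, sep, value = line.partition(":")
        label = label.strip()
        match = NUMBER.search(value)
        if not sep or match is None:
            continue
        if label in SIMPLE_FIELDS:
            key, convert = SIMPLE_FIELDS[label]
            metrics[key] = convert(match.group())
        elif label == "Time per request":
            # ab prints this label twice; the second line is the mean
            # across all concurrent requests.
            overall = "across all" in value
            key = "ms_per_request_overall" if overall else "ms_per_request"
            metrics[key] = float(match.group())
    return metrics


# A few lines copied from the old-infrastructure report above.
OLD_REPORT = """\
Server Hostname: mywordpress.com
Concurrency Level: 50
Time taken for tests: 44.174 seconds
Complete requests: 500
Failed requests: 0
Requests per second: 11.32 [#/sec] (mean)
Time per request: 4417.433 [ms] (mean)
Time per request: 88.349 [ms] (mean, across all concurrent requests)
"""

if __name__ == "__main__":
    print(" ".join(build_ab_command("https://mywordpress.com/")))
    print(parse_ab_report(OLD_REPORT))
```

Running the same command against both setups and parsing both reports gives two dictionaries that can be compared field by field.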

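As you can see, the average time per request (across all concurrent requests) dropped from 88.349 ms to 16.412 ms with the new web server setup. That makes the new setup about 5.4 times faster, an 81% reduction in time per request, and throughput rose to match, from 11.32 to 60.93 requests per second.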
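The arithmetic behind this comparison can be checked with a short Python sketch. The figures are taken from the two ApacheBench reports above; the function names are illustrative. It also shows how the fields in a report relate to each other: requests per second is 1000 divided by the overall time per request in milliseconds, and the per-request mean is roughly the overall figure multiplied by the concurrency level.

```python
def speedup(old, new):
    """How many times faster the new timing is than the old one."""
    return old / new


def percent_reduction(old, new):
    """Reduction in time, as a percentage of the old timing."""
    return (old - new) / old * 100.0


# Mean time per request across all concurrent requests, in ms.
OLD_MS = 88.349
NEW_MS = 16.412
CONCURRENCY = 50

if __name__ == "__main__":
    print(f"speedup: {speedup(OLD_MS, NEW_MS):.2f}x")
    print(f"time reduction: {percent_reduction(OLD_MS, NEW_MS):.1f}%")
    # Throughput follows directly from the overall time per request.
    print(f"old requests/sec: {1000.0 / OLD_MS:.2f}")
    print(f"new requests/sec: {1000.0 / NEW_MS:.2f}")
    # Mean time one request waits when 50 run concurrently.
    print(f"old per-request mean: {CONCURRENCY * OLD_MS:.1f} ms")
    print(f"new per-request mean: {CONCURRENCY * NEW_MS:.1f} ms")
```

A ratio of about 5.38 is the same thing as the new time being roughly 18.6% of the old one, which is why the "times faster" and "percent reduction" figures look so different for the same result.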

The average page load time of the site ranged from 1.1 seconds to 2.5 seconds as measured by external site speed checkers like Pingdom. Compared to the previous infrastructure, where load times ranged from 7 to 10 seconds, the new solution was roughly 5 times faster, which put this site in the top 25% of the fastest sites on the internet.

Bobcares helps web sites, web hosts and other online businesses deliver lightning fast, secure services through infrastructure design, preventive maintenance, and 24/7 emergency server administration.
